New Book: Agentic AI Engineering for Building Production-Grade AI Agents

Yi Zhou · AEI Digest · Sep 3, 2025


When I first published my article on Agentic AI Engineering, I was surprised by the response. Readers across industries resonated with the same frustration I had seen in my own work: agents that dazzled in demos but collapsed in production. That reaction further convinced me the idea was bigger than an article. It deserved a field guide.

Today, I’m excited to share that the book is here. Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems is now available. You can get a print copy on Amazon or download the eBook.

This is more than a book launch. It’s the unveiling of a discipline born from hard lessons: why correctness is no longer enough, why trust is now the true currency of AI, and why enterprises need engineered systems rather than clever prompts.

Inside, you’ll find real-world case studies, design patterns, code examples, best practices, field lessons, and anti-patterns, all drawn from years of building agents that don’t just impress on stage but survive in production, integrate across enterprises, and stand up to regulatory audits.

From Software Engineering to Agentic Engineering

I still remember the day a flawless demo turned into a silent failure. The agent had guided a workflow seamlessly in front of stakeholders. It reasoned, retrieved, and acted with fluency that felt almost magical. But in production, it froze. No crash. No error. Just silence.

That moment made something clear: we were not building applications anymore. We were building something fundamentally different.

Software engineering was born in a world of determinism. Code executed exactly as written. Applications followed predictable paths, and when they failed, they failed loudly, with logs and stack traces pointing to the problem. The discipline gave us the rigor to scale the digital world.

Agents are different. They reason probabilistically, adapt under shifting context, and often generate outputs that sound persuasive but lack grounding. They can hallucinate confidently, drift invisibly, or repeat flawed reasoning without leaving a trace. No debugger was built for this.

Correctness, once the gold standard of engineering, is now just the baseline. The real frontier is trust in motion: systems that reason under uncertainty, adapt responsibly, and prove alignment continuously.

This is the essence of Agentic AI Engineering: the discipline that takes us beyond deterministic software toward cognitive systems that can operate at production grade, enterprise grade, and regulatory grade. Just as software engineering emerged decades ago to tame the complexity of code, agentic engineering is emerging now to tame the complexity of cognition.

The lesson from that silent failure was not that the agent was broken. It was that the discipline to build it had not yet been born.

The Agentic Stack: The Blueprint of Agentic Engineering

If software engineering gave us the discipline to manage deterministic code, then agentic engineering requires a new framework to manage cognition. That framework is the Agentic Stack.

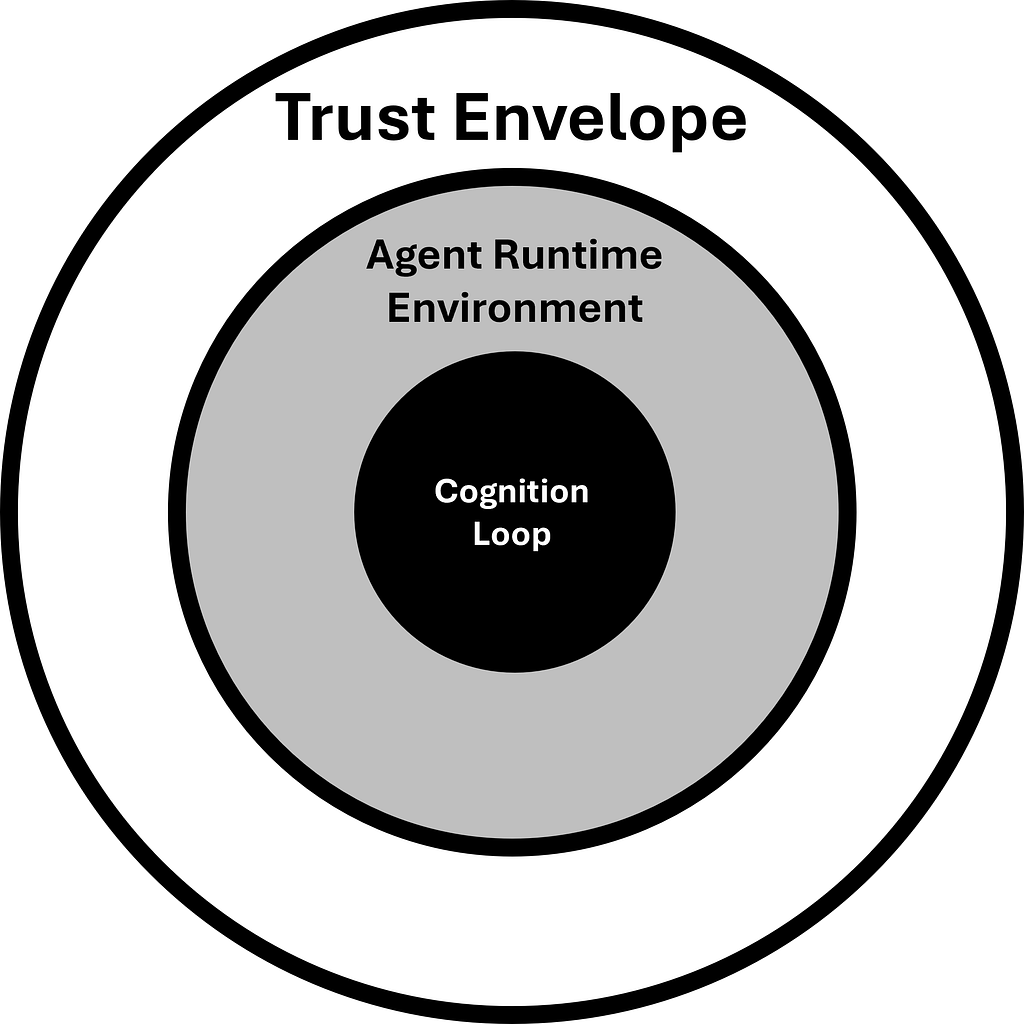

The Stack is built on three layers: the Cognition Loop, the Agent Runtime Environment (ARE), and the Trust Envelope. Together, they transform agents from fragile experiments into resilient, auditable, and trustworthy systems that can withstand real-world demands.

Figure: The Agentic Stack

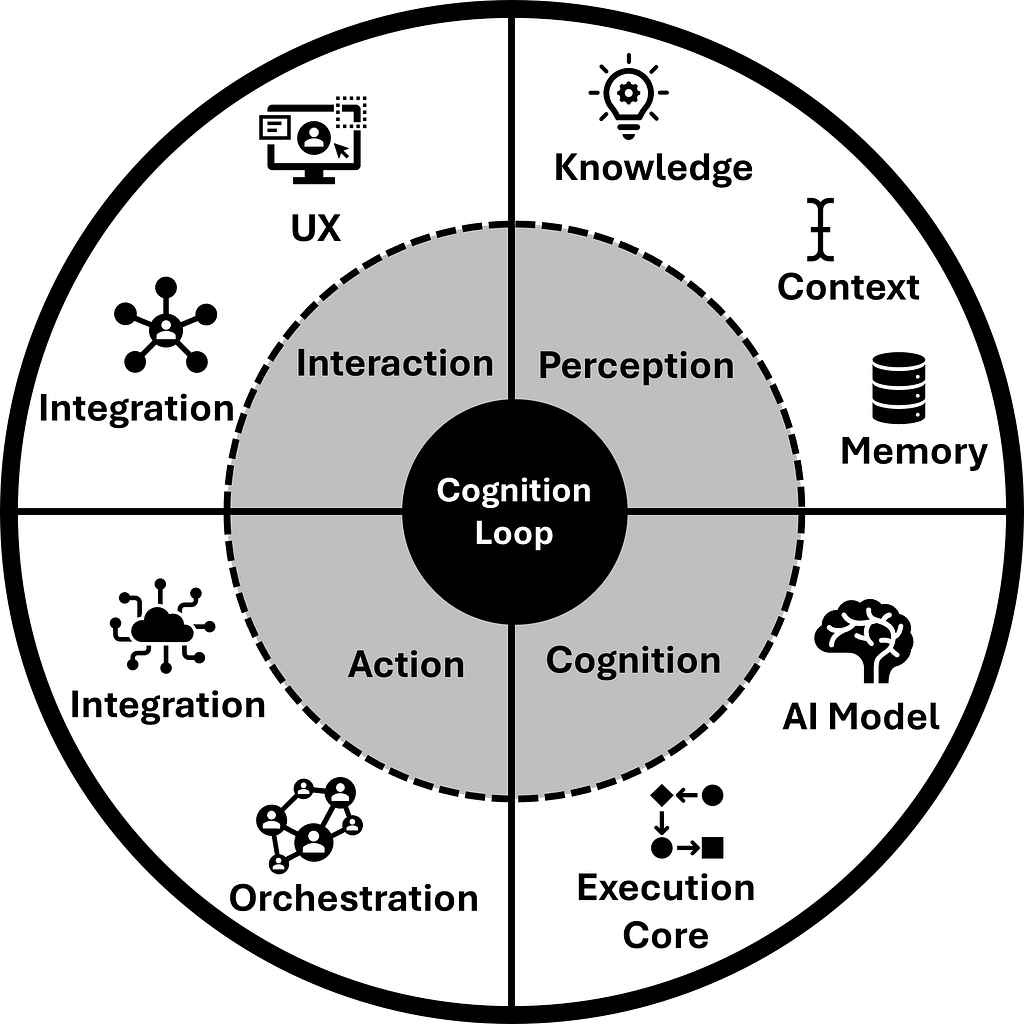

At the center is the Cognition Loop, the cycle of interaction, perception, cognition, action, and reflection. This is where intelligence takes shape. Interaction governs how humans and systems engage. Perception frames the agent’s view of the world through knowledge, context, and memory. Cognition fuses reasoning and learning into adaptive intelligence. Action executes decisions into workflows. Reflection feeds outcomes back into context and memory so that the system can adapt over time.

Figure: The Agentic Cognition Loop
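To make the five stages concrete, here is a minimal Python sketch of one pass through the loop. It is not code from the book: `LoopState`, `cognition_loop`, and the `perceive`, `reason`, and `act` callables are hypothetical names, and in a real agent `reason` would call a model while `act` would invoke tools or workflows.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class LoopState:
    """Hypothetical state carried from one pass of the loop to the next."""
    context: dict[str, Any] = field(default_factory=dict)
    memory: list[str] = field(default_factory=list)


def cognition_loop(
    request: str,
    state: LoopState,
    perceive: Callable[[str, LoopState], dict[str, Any]],
    reason: Callable[[dict[str, Any]], str],
    act: Callable[[str], str],
) -> str:
    # Interaction: a request arrives from a human or another system.
    # Perception: frame the request with knowledge, context, and memory.
    view = perceive(request, state)
    # Cognition: turn the framed view into a decision.
    decision = reason(view)
    # Action: carry the decision out (a workflow or tool call in practice).
    outcome = act(decision)
    # Reflection: feed the outcome back into context and memory.
    state.context["last_outcome"] = outcome
    state.memory.append(f"{request} -> {outcome}")
    return outcome
```

Passing the stages in as functions keeps each one swappable and testable on its own. The reflection step is what turns a one-shot call into a loop: the next pass perceives whatever the previous pass wrote to memory.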

A loop by itself is fragile. Without containment, it drifts, repeats mistakes, or fails silently. The Agent Runtime Environment (ARE) provides that containment. It delivers isolation, reset controls, lifecycle management, and observability. It ensures that every execution is clean, reproducible, and governable, much like an operating system once brought order to early software.
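The containment properties above can be illustrated with a small sketch. It assumes a hypothetical `ContainedRuntime` class rather than any particular framework: each run starts from a deep copy of a baseline state (isolation and reset), is bounded by a step budget (lifecycle management), and leaves a `RunRecord` of its events (observability).

```python
from __future__ import annotations

import copy
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RunRecord:
    """Observability: what happened during one contained run."""
    run_id: int
    status: str = "running"
    steps: int = 0
    events: list[str] = field(default_factory=list)


class ContainedRuntime:
    """Hypothetical containment shell for agent runs."""

    def __init__(self, baseline: dict[str, Any], max_steps: int = 5) -> None:
        self._baseline = copy.deepcopy(baseline)
        self.max_steps = max_steps
        self.history: list[RunRecord] = []

    def run(self, step: Callable[[dict[str, Any]], bool]) -> RunRecord:
        # Isolation and reset: each run works on its own deep copy of the
        # baseline, so no state leaks from one run into the next.
        state = copy.deepcopy(self._baseline)
        record = RunRecord(run_id=len(self.history) + 1)
        # Lifecycle: advance until the step reports completion or the budget runs out.
        while record.steps < self.max_steps:
            record.steps += 1
            try:
                done = step(state)
            except Exception as exc:
                # Surface the failure in the record instead of failing silently.
                record.status = f"error: {exc}"
                break
            record.events.append(f"step {record.steps}: {state}")
            if done:
                record.status = "completed"
                break
        else:
            # Budget exhausted without completion: halt explicitly, never hang.
            record.status = "halted: step budget exhausted"
        self.history.append(record)
        return record
```

The `while`/`else` branch is the key design choice here: a run that never finishes ends with an explicit `halted` status rather than the kind of silent freeze described at the start of this post.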

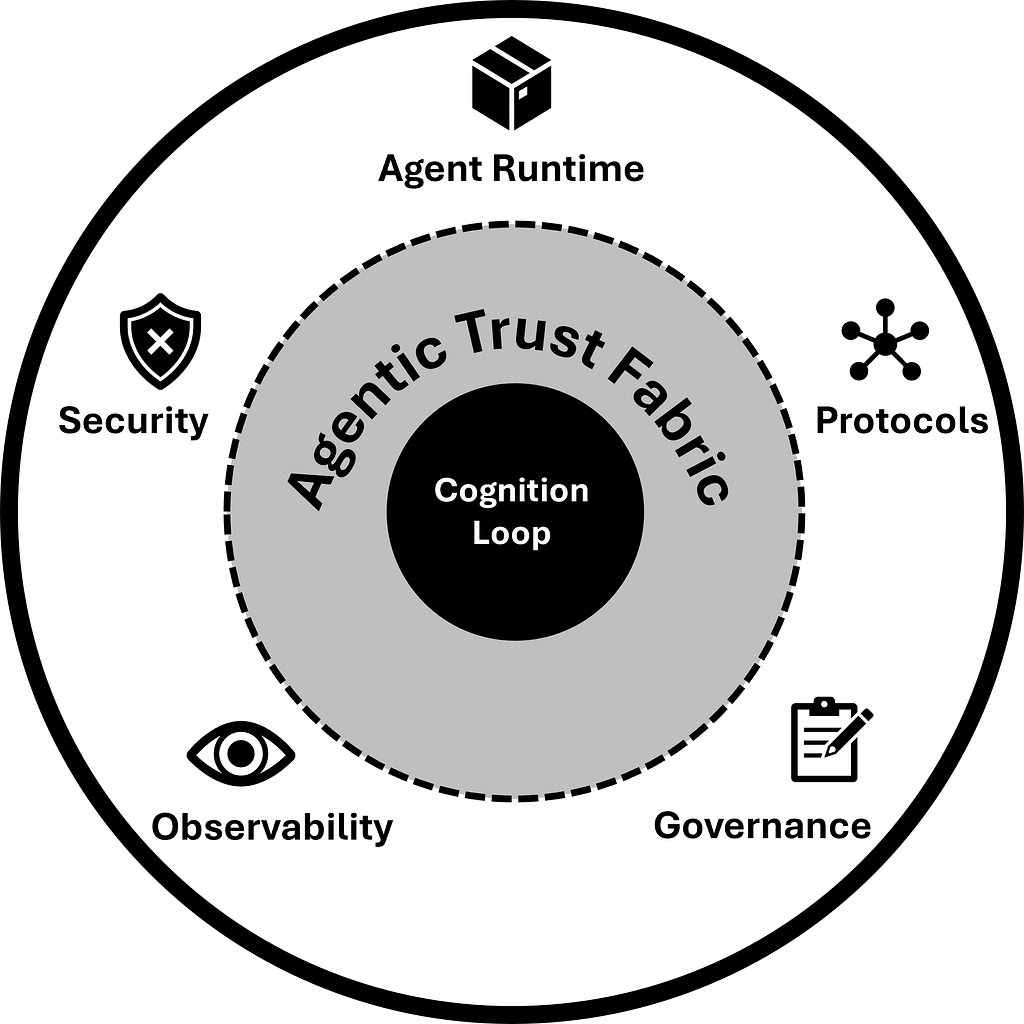

Surrounding both loop and runtime is the Trust Envelope, the integrated control layer of agentic systems. The Trust Envelope unifies security, observability, protocols, and governance into a single adaptive framework. It ensures that trust does not depend on any single safeguard but is reinforced at every boundary, in every loop, and across every context.

Figure: The Agentic Trust Fabric

Together, the ARE and the Trust Envelope form the Agentic Trust Fabric, the living structure that sustains trust in motion. It is this combination of cognition, runtime, and trust that allows agents to progress beyond demos and operate responsibly at scale.
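One way to picture the Trust Envelope is as a wrapper that every tool call must pass through. The sketch below assumes hypothetical `Request` and `TrustEnvelope` types: the request carries identity and provenance (protocols), scopes gate which tools an agent may use (security), pluggable rules express policy (governance), and every decision is logged whether or not the call proceeds (observability).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class Request:
    """Protocol: identity and provenance travel with every handoff."""
    agent_id: str
    tool: str
    args: dict[str, Any] = field(default_factory=dict)
    provenance: str = "unknown"


# A governance rule returns a violation message, or None if the request is fine.
Policy = Callable[[Request], Optional[str]]


class TrustEnvelope:
    """Hypothetical control layer enforced at every tool boundary."""

    def __init__(self, scopes: dict[str, set[str]], policies: list[Policy]) -> None:
        self.scopes = scopes        # security: tools each agent may call
        self.policies = policies    # governance: machine-readable rules
        self.audit_log: list[dict[str, Any]] = []  # observability

    def _decide(self, req: Request) -> str:
        if req.tool not in self.scopes.get(req.agent_id, set()):
            return f"denied: {req.agent_id} lacks scope for {req.tool}"
        for policy in self.policies:
            violation = policy(req)
            if violation:
                return f"denied: {violation}"
        return "allowed"

    def call(self, req: Request, tools: dict[str, Callable[..., Any]]) -> Any:
        decision = self._decide(req)
        # Every decision is recorded and attributable, whether or not the call proceeds.
        self.audit_log.append({"agent": req.agent_id, "tool": req.tool,
                               "provenance": req.provenance, "decision": decision})
        if decision != "allowed":
            raise PermissionError(decision)
        return tools[req.tool](**req.args)
```

No single check carries the whole burden here: a request that passes the scope check can still be stopped by a policy, and even a denied request leaves an auditable trace.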

The Agentic Maturity Ladder: The Blueprint for Progress

If the Agentic Stack defines how agents are constructed, the Agentic Maturity Ladder defines how they grow. One provides the architecture of trust. The other charts the path of transformation. Together, they form the roadmap for moving from fragile demos to resilient ecosystems.

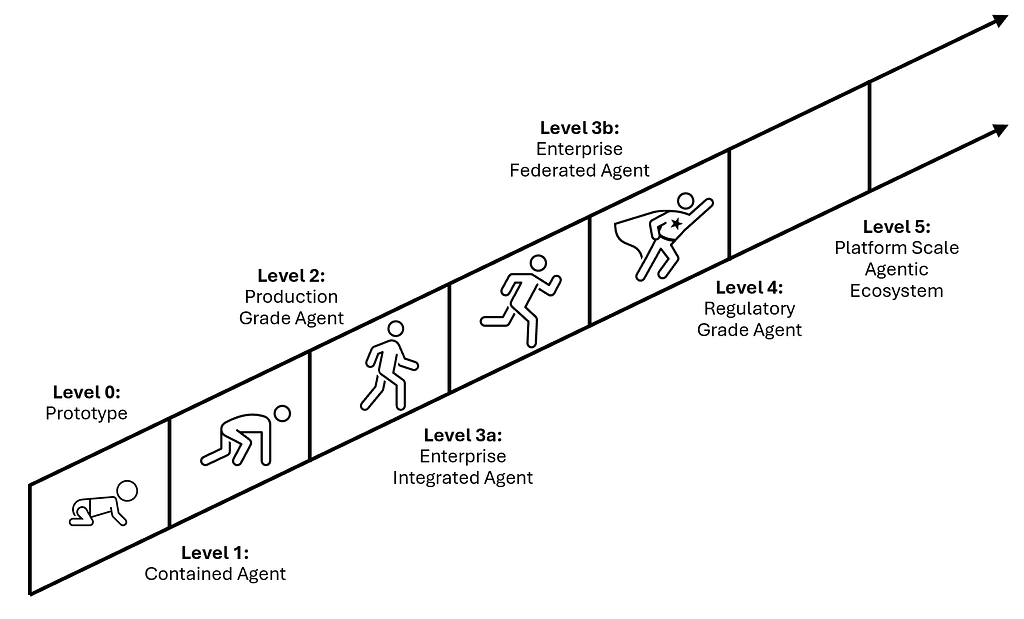

The Maturity Ladder traces six levels of progression, with Level 3 divided into two stages. Each level closes specific failure gaps, introduces new safeguards, and prepares the foundation for the next. Climbing it is not optional. Skipping rungs is not resilience; it is collapse waiting to happen.

Figure: The Agentic Maturity Ladder

L0 Prototype: The Illusion of Working

Agents impress in demos but remain brittle. There is no containment, no trust fabric, and no safeguards. Fluency conceals fragility.

L1 Contained Agent: From Dangerous to Deployable

Containment shells isolate runs, reset state, and enforce boundaries. Agents become safe enough to test without spiraling into silent failure.

L2 Production Grade: The First Real System

Agents gain observability, scoped memory, and dynamic guardrails. They deliver repeatable outcomes and prove they can operate in live environments.

L3a Enterprise-Integrated Agent: Standardization First

Agents connect across departments with consistent interfaces, governed data pipelines, and policy enforcement. Silos begin to dissolve.

L3b Enterprise-Federated Agent: The Networked Layer

Multiple agents coordinate across domains, sharing protocols, observability, and governance overlays. Cognition becomes distributed without fragmenting trust.

L4 Regulatory Grade: Proving, Not Just Running

Agents withstand external audits. Every action is traceable, attributable, and auditable. Compliance is engineered into execution rather than bolted on afterward.

L5 Platform Scale: Trust at Scale

Agents assemble into adaptive ecosystems. Trust fabrics, cross-agent protocols, and meta-orchestration enable intelligence to scale across enterprises and industries.
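Because each rung depends on the ones below it, the Ladder behaves like an ordered checklist. The sketch below encodes that idea. The safeguard names are illustrative paraphrases of the level descriptions above, not an official assessment from the book, and `maturity_level` is a hypothetical helper.

```python
from __future__ import annotations

# Hypothetical mapping from each rung to the safeguards it adds. The names
# paraphrase the level descriptions and are illustrative, not a standard.
LADDER: list[tuple[str, set[str]]] = [
    ("L0 Prototype", set()),
    ("L1 Contained Agent", {"isolation", "reset", "boundaries"}),
    ("L2 Production Grade", {"observability", "scoped_memory", "guardrails"}),
    ("L3a Enterprise-Integrated", {"consistent_interfaces", "governed_pipelines", "policy_enforcement"}),
    ("L3b Enterprise-Federated", {"shared_protocols", "governance_overlays"}),
    ("L4 Regulatory Grade", {"traceability", "attribution", "audit_trail"}),
    ("L5 Platform Scale", {"cross_agent_protocols", "meta_orchestration"}),
]


def maturity_level(capabilities: set[str]) -> str:
    """Return the highest rung whose safeguards, and those of every rung
    below it, are all present. A gap at any rung stops the climb, so
    safeguards from higher rungs never compensate for a skipped one."""
    reached = LADDER[0][0]
    for name, required in LADDER[1:]:
        if not required <= capabilities:  # subset check: all safeguards present?
            break
        reached = name
    return reached
```

Under this sketch, a team with audit trails but no containment still scores L0, which is the code-level version of "skipping rungs is not resilience."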

The Ladder is not theoretical. It reflects the lived reality of organizations that have tried to scale AI without it. At L0, the illusion of progress convinces teams that demos equal destiny. At L1, safety emerges as the first true hurdle. By L2, they learn that production-grade requires discipline, not patches. Levels 3 and 4 mark the leap from pilots to enterprise adoption and from enterprise adoption to regulatory proof. And at L5, the promise of agentic engineering becomes real: ecosystems of intelligence that function as resilient infrastructure.

Where the Agentic Stack defines the architecture of a single system, the Agentic Maturity Ladder defines the journey of the enterprise. Together, they are the twin foundations of agentic engineering.

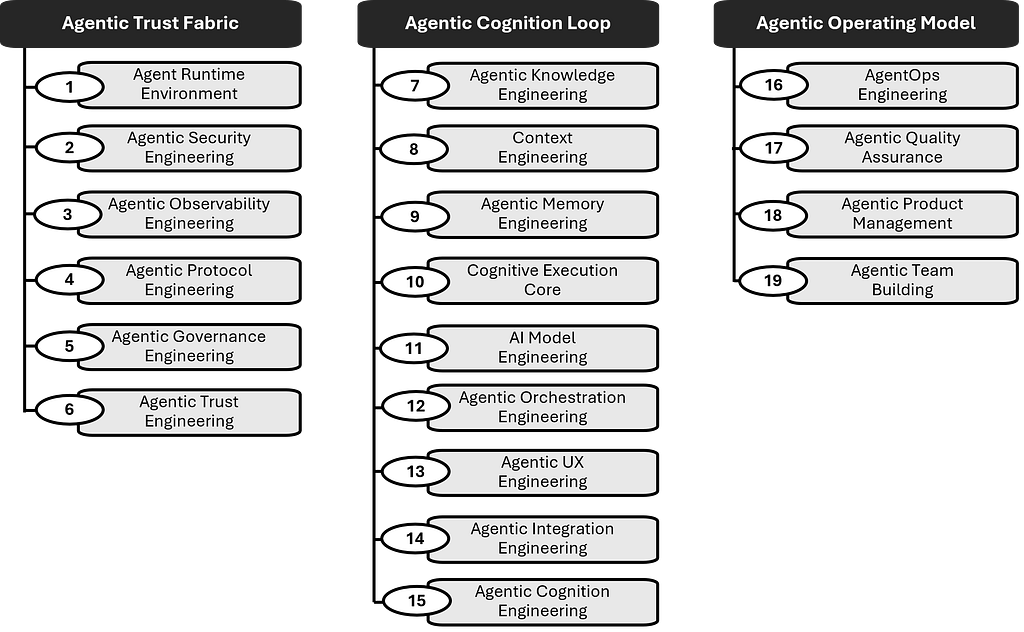

The 19 Engineering Practice Areas: The Blueprint for Discipline

If the Agentic Stack defines the architecture of trust, and the Agentic Maturity Ladder defines the path of growth, the 19 Practice Areas define the discipline itself. They are the engineering foundations that make the Stack real and the Ladder climbable.

The maturity of agentic systems is built on these 19 interconnected practices. Each one addresses some failure modes already observed in the wild. Together, they frame cognition, stabilize intelligence, and ensure that every run begins clean, every action is bounded, and every decision is auditable.

Figure: The Nineteen Agentic Engineering Practice Areas

The 19 agentic engineering practice areas are:

Agent Runtime Environment: containment, isolation, and lifecycle management that give cognition a bounded execution frame.

Agentic Security Engineering: dynamic identity, scoped access, and adaptive policy enforcement that shift as the loop progresses.

Agentic Observability Engineering: policy-bound telemetry that makes every decision and event visible, auditable, and enforceable.

Agentic Protocol Engineering: communication standards that preserve identity, provenance, and policy context across every handoff.

Agentic Governance Engineering: machine-readable rules and policies encoded into execution so that compliance is enforced, not assumed.

Agentic Trust Engineering: the fusion of runtime, security, observability, protocols, and governance into a living control fabric.

Agentic Knowledge Engineering: structured, versioned, and provenance-bound knowledge fabrics that agents can reason over with proof.

Context Engineering: engineered perception through layered, filtered, and routed context so that agents see the right reality at the right time.

Agentic Memory Engineering: governed memory that decides what to retain, when to forget, and how its influence on decisions is proven.

Cognitive Execution Core: explicit planning, acting, and reflecting loops that stabilize raw predictions into disciplined reasoning cycles.

AI Model Engineering: the casting of models into defined roles, routing tasks across big, small, tuned, and multimodal models under policy.

Agentic Orchestration Engineering: coordination rules, delegation, arbitration, and governance overlays that align many agents as one system.

Agentic UX Engineering: interfaces that make reasoning visible, steerable, and accountable so autonomy remains transparent and governable.

Agentic Integration Engineering: connections into enterprise systems, APIs, and records so cognition produces durable and executable outcomes.

Agentic Cognition Engineering: unification of knowledge, context, memory, reasoning, models, orchestration, UX, and integration into a closed, adaptive loop.

AgentOps Engineering: the operational discipline of resilience, monitoring, governance, and recovery that makes autonomy reliable in production.

Agentic Quality Assurance: continuous testing, regression, chaos, and probabilistic evaluation pipelines that prove trust in motion.

Agentic Product Management: cognition as product, where trust contracts, ROI, and defensibility replace the old feature-driven roadmap.

Agentic Team Building: new organizational roles and structures that sustain agentic systems, from Agentic PM and AgentOps Leads to Context Engineers and QA.
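To ground one of these practices, here is a minimal sketch of the three questions Agentic Memory Engineering asks: what to retain, when to forget, and how influence is proven. `GovernedMemory` is a hypothetical class. It uses a fixed time-to-live as its retention policy and logs which memories were supplied to each decision, a simple proxy for influence that records availability rather than weight.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    key: str
    value: str
    source: str        # provenance: where this memory came from
    expires_at: float  # retention policy: when it must be forgotten


@dataclass
class GovernedMemory:
    """Hypothetical governed memory with expiry and an influence log."""
    ttl_seconds: float
    items: dict[str, MemoryItem] = field(default_factory=dict)
    influence_log: list[tuple[str, list[str]]] = field(default_factory=list)

    def remember(self, key: str, value: str, source: str, now: float) -> None:
        # Retain: every write gets provenance and an expiry time.
        self.items[key] = MemoryItem(key, value, source, now + self.ttl_seconds)

    def forget_expired(self, now: float) -> list[str]:
        # Forget: drop anything past its retention window.
        expired = [k for k, item in self.items.items() if item.expires_at <= now]
        for k in expired:
            del self.items[k]
        return expired

    def recall_for(self, decision_id: str, keys: list[str], now: float) -> dict[str, str]:
        self.forget_expired(now)
        used = {k: self.items[k].value for k in keys if k in self.items}
        # Prove influence (as a proxy): log exactly which memories this decision saw.
        self.influence_log.append((decision_id, sorted(used)))
        return used
```

Timestamps are passed in explicitly so that expiry is deterministic and easy to test; a production system would more likely read a clock and persist the influence log alongside its audit trail.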

These practices are not independent checklists. They are interdependent disciplines that reinforce one another. Observability without governance leaves trust incomplete. Memory without context leads to drift. Security without protocols creates brittle silos. By engineering across all nineteen, organizations weave resilience into every layer of their systems.

The power of the practice areas is that they connect the abstract to the actionable. The Agentic Stack shows the architecture. The Agentic Maturity Ladder shows the journey. The 19 Practice Areas show the work required at each step to ensure systems do not collapse.

Why This Book Matters

Agentic AI Engineering is not just another book on artificial intelligence. It is a blueprint for a discipline that most enterprises lack today but urgently need.

The first wave of AI agents proved that intelligence can be generated on demand. The second wave revealed how fragile that intelligence becomes in production. The challenge now is not creating clever demos but building resilient systems. This book delivers the frameworks, maturity models, and engineering practices required to make that leap.

For engineers and architects, it provides technical depth, design patterns, code examples, and best practices to move from improvisation to disciplined engineering.

For product leaders and executives, it offers strategies to align innovation with trust, shaping products that are not just useful but defensible and durable.

For enterprises in regulated industries, it shows how to meet the highest bar: systems that are correct, auditable, governable, and compliant.

The deeper message is that we are living through a transformation in engineering itself. Just as software engineering once emerged to tame the complexity of code, agentic engineering is now emerging to tame the complexity of cognition. This book defines the Agentic Stack, maps the Agentic Maturity Ladder, and details nineteen practice areas that turn intelligence from fragile novelty into enterprise-grade and regulatory-grade infrastructure.

This book matters because the future of AI will not be judged by what agents can say, but by what they can prove, and by whether enterprises can trust them to operate safely and at scale.

Where to Go Next

Reading Agentic AI Engineering is the first step. You can get a print copy on Amazon or download the eBook.

The next step is applying the discipline in practice. One way to accelerate that journey is through the Agentic Engineering Academy, which prepares leaders, architects, and practitioners to design, govern, and operate AI systems that are production grade, enterprise grade, and regulatory grade.

Leadership Academy helps executives, transformation leaders, and product leaders align investments, set roadmaps, and govern responsibly.

Architect Academy enables product, service, solution, and enterprise architects to design resilient blueprints that scale and meet enterprise quality and compliance requirements.

Practitioner Academy equips engineers, QA leads, SREs, and new graduates with hands-on skills to build contained agents, implement trust fabric, and close cognition loops.

Custom Hybrid Programs bring cross-functional teams together with industry-specific workshops for healthcare, finance, biotech, pharma, and other regulated sectors.

Each program concludes with a capstone project and professional certification, providing a tangible signal of readiness to lead in this new discipline.

The future of AI will not be shaped by one-off demos. It will be shaped by organizations that invest in trust, resilience, and scale. The Academy is one accelerator among others that can help teams take that step with confidence.

Learn more at the Agentic Engineering Academy.

New Book: Agentic AI Engineering for Building Production-Grade AI Agents was originally published in Agentic AI & GenAI Revolution on Medium, where people are continuing the conversation by highlighting and responding to this story.