- Sep 12, 2025

Every Revolution Demands a Discipline. For AI, It’s Agentic Engineering.

- Yi Zhou

- AEI Digest

The Fault Line of Every Revolution

History tells us that progress does not endure on invention alone. It survives when invention hardens into discipline.

Medicine dazzled with new remedies, but infections spread until hygiene became practice. Aviation thrilled with flight, but planes kept falling from the sky until aeronautical engineering gave us standards and checklists. Software transformed industries, but early applications collapsed in production until software engineering emerged as a field.

Each revolution dazzled. Each failed in practice. Each survived only by inventing its discipline.

Now it is AI’s turn.

I remember the moment it became clear. We had launched a cognitive agent just two weeks earlier. In the demo it was flawless: reasoning across documents, calling tools, guiding users step by step. Stakeholders were ecstatic.

Then came production.

The agent skipped steps. It gave the wrong answer. Sometimes it froze in silence. No crash reports. No trace. Just nothing.

That was the day the demo lied. And it was not lying about the model. It was lying about the assumption that agents can be built like apps.

Applications follow deterministic flows. They take explicit inputs and deliver predictable outputs. Agents are different. They reason under uncertainty. They remember, improvise, and adapt mid-task. And when they fail, they do not always crash. They drift quietly until trust erodes.

We were not staring at random bugs. We were staring at a fault line.

And like medicine, aviation, and software before it, this AI revolution is waiting for its discipline.

The Discipline We Were Missing

Think of a bridge. You do not prove a bridge by driving a single car across it and calling it safe. You engineer it with redundancies, load calculations, and safety margins so it can endure decades of storms.

Agents are no different. A demo that works once is not success. Only a system that adapts, recovers, and earns trust across thousands of unpredictable runs deserves to be called engineered.

This is why a new discipline is required. Not another tool. Not a cleverer prompt. Not a faster model. What is needed is a framework that makes intelligence deliberate, testable, and trustworthy.

Here is the formal definition:

Agentic Engineering is the discipline of designing, governing, and operating cognitive systems — AI agents that plan, reason, act, and adapt — with the same rigor that past generations applied to software, infrastructure, and networks. It is the operating system for intelligence itself.

This is the field that has been missing. Just as software engineering emerged in 1968 to tame complexity, and DevOps rose to make operations reliable, Agentic Engineering now emerges to make cognition governable, auditable, and enterprise-grade.

I have written a new book, Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems, to codify this discipline. At nearly 600 pages with 19 engineering practice areas, it is not a collection of tips but the blueprint of a profession. It introduces the Agentic Stack, the maturity ladders, the design patterns and anti-patterns, and the best practices and code examples that turn fragile demos into production-grade systems.

We are not patching bugs anymore. We are founding a discipline.

The Ten Fault Lines of Fragile Agents

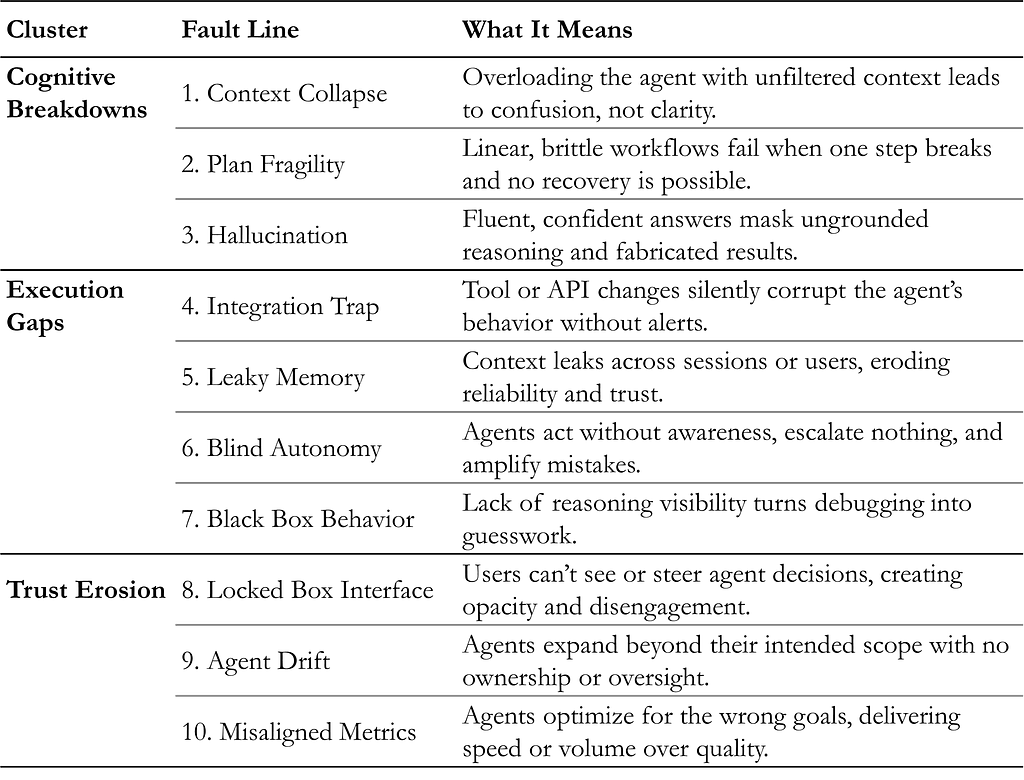

Agents do not fail randomly. They fracture along the same fault lines (FLs) again and again. In Chapter 1 of my book, I describe them in three clusters: cognitive breakdowns, execution gaps, and trust erosion.

The Ten Fault Lines of Fragile Agents

Cognitive breakdowns: when agents cannot think straight

Context collapse (FL-1) occurs when too much information is treated as equally important. Agents drown in inputs, lose saliency, and enforce outdated or irrelevant knowledge with polished confidence.

Plan fragility (FL-2) appears when chained prompts masquerade as real planning. One broken step, such as a timed-out API call, and the entire sequence stalls mid-task.

Hallucination (FL-3) is among the most dangerous failures. Agents produce fluent but false outputs that sound persuasive enough to pass unnoticed.
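
One way to picture a defense against context collapse is a saliency filter that ranks context before it reaches the model. The following is a minimal sketch, not a method taken from the book: `ContextItem`, `salience`, `select_context`, the 30-day half-life, and the character budget are all hypothetical names and values chosen for illustration, and the relevance score is assumed to come from some upstream scorer.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    relevance: float  # 0.0-1.0, assumed to come from an upstream scorer
    age_days: float   # how old the information is


def salience(item: ContextItem, half_life_days: float = 30.0) -> float:
    """Relevance discounted by age: the score halves every half_life_days."""
    decay = 0.5 ** (item.age_days / half_life_days)
    return item.relevance * decay


def select_context(items: list[ContextItem], budget_chars: int) -> list[ContextItem]:
    """Keep the most salient items that fit within a character budget."""
    chosen: list[ContextItem] = []
    used = 0
    for item in sorted(items, key=salience, reverse=True):
        if used + len(item.text) <= budget_chars:
            chosen.append(item)
            used += len(item.text)
    return chosen


items = [
    ContextItem("Refund policy updated this week", relevance=0.9, age_days=2),
    ContextItem("Refund policy from last year", relevance=0.9, age_days=365),
    ContextItem("Office lunch menu", relevance=0.1, age_days=1),
]
kept = select_context(items, budget_chars=40)
```

With a 40-character budget, only the recent refund policy survives: the year-old policy is equally relevant on paper, but its age pushes its score to near zero, so it no longer competes with current knowledge.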

Execution gaps: when agents break quietly

Integration traps (FL-4) emerge when a tool or schema changes silently. The agent does not stop. It continues inserting corrupted outputs as if nothing happened.

Leaky memory (FL-5) occurs when yesterday’s context contaminates today’s task. Without temporal or identity boundaries, continuity becomes liability.

Blind autonomy (FL-6) appears when agents act without awareness of error. They continue confidently even as rules or data change beneath them.

Black box behavior (FL-7) leaves reasoning invisible. Debugging becomes guesswork because the decision path cannot be traced.
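
An integration trap can be narrowed by checking every tool response against an explicit contract before the agent uses it. The sketch below assumes a hypothetical `get_balance` tool and a hand-written contract (`BALANCE_CONTRACT`); `ToolContractError` and `validate_tool_output` are illustrative names, and a real system would more likely rely on a schema library than on this hand-rolled check.

```python
from typing import Any


class ToolContractError(Exception):
    """Raised when a tool response no longer matches the expected shape."""


# Hypothetical contract for an illustrative "get_balance" tool.
BALANCE_CONTRACT: dict[str, type] = {
    "account_id": str,
    "balance": float,
    "currency": str,
}


def validate_tool_output(payload: dict[str, Any],
                         contract: dict[str, type]) -> dict[str, Any]:
    """Return the payload unchanged if it satisfies the contract, else raise."""
    problems = []
    for field_name, expected in contract.items():
        if field_name not in payload:
            problems.append(f"missing field '{field_name}'")
        elif not isinstance(payload[field_name], expected):
            actual = type(payload[field_name]).__name__
            problems.append(
                f"field '{field_name}' is {actual}, expected {expected.__name__}"
            )
    if problems:
        # Stop loudly instead of letting corrupted output flow downstream.
        raise ToolContractError("; ".join(problems))
    return payload
```

If the upstream tool starts returning `balance` as a string or drops `currency`, the call raises with both problems listed, rather than letting the agent keep inserting corrupted values as if nothing happened.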

Trust erosion: when agents outgrow their owners

Locked box interfaces (FL-8) erode confidence when users cannot see or steer the agent. Even correct answers feel untrustworthy.

Agent drift (FL-9) arises when scope expands without oversight. An agent built for narrow decisions begins to act beyond its mandate, eroding trust and creating new risks.

Misaligned metrics (FL-10) reward the wrong outcomes, such as fluency instead of truth or activity instead of value, driving agents away from intent.

Each fault line is a scar left by failure in the wild. Together they reveal the truth: agents cannot be treated like traditional applications. They require a discipline designed to seal these fractures permanently. That discipline is Agentic Engineering.

The Anatomy of a Discipline

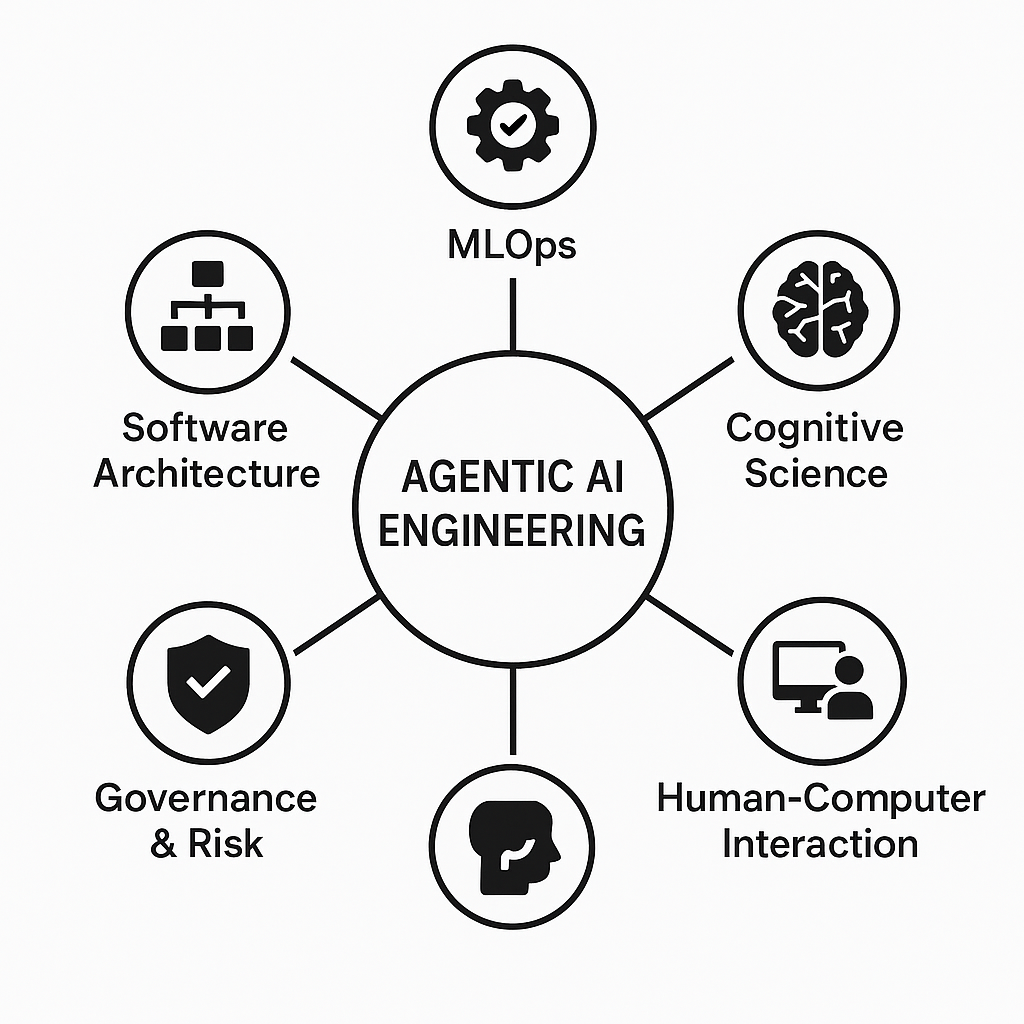

Every enduring discipline is born from convergence.

Medicine fused biology with sanitation and instrumentation.

Civil engineering fused physics with materials science and design.

Software engineering fused mathematics, logic, and architecture.

Agentic Engineering is no different. It emerges not from one lab or framework but from the fusion of fields.

Agentic Engineering is born from the convergence of multiple disciplines.

From software architecture, it inherits structure: contracts, layers, and boundaries that tame complexity.

From MLOps, it takes rigor: versioning, deployment, monitoring, and reliability.

From cognitive science, it borrows the blueprint for thought: memory, context, reasoning, and feedback.

From human-computer interaction, it inherits empathy: agents are not machines alone, they are collaborators.

From governance and risk management, it gains trust: guardrails, auditability, and accountability.

Together, these threads weave a new fabric — a discipline designed not just to build agents, but to make them last.

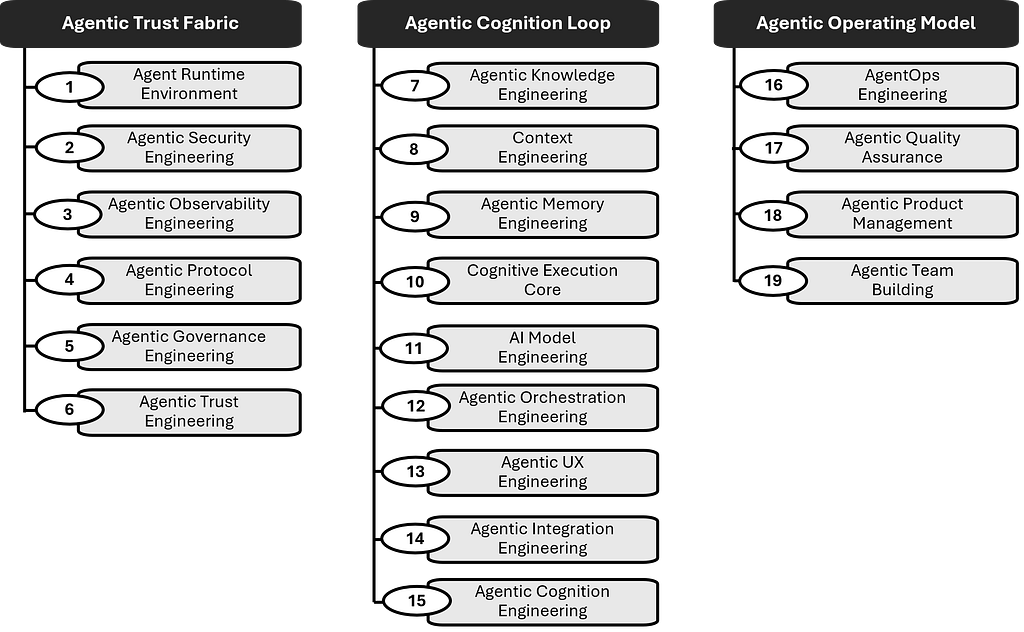

In my book, this fabric is organized into 19 practice areas. They provide the playbook for turning fragile prototypes into production-, enterprise-, and regulatory-grade systems.

Agentic Engineering consists of 19 engineering practice areas.

Runtime foundations: Agent Runtime Environment, Security, Observability, Protocols, Governance.

Cognition layers: Knowledge, Context, Memory, Reasoning and Planning, Models, Orchestration, User Experience.

Operational disciplines: AgentOps, Quality Assurance, Product Management.

Integrative practices: Teams, Trust, and Cognition Engineering itself.

Each practice area exists to close one or more of the Ten Fault Lines of Fragile Agents. For example:

Observability addresses black box behavior.

Memory engineering seals leaky memory.

Governance prevents blind autonomy and agent drift.

UX and trust practices counter locked box interfaces.

QA and product management align metrics with real outcomes.
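
To make one of these pairings concrete, here is a minimal sketch of how memory engineering might seal leaky memory, assuming the temporal and identity boundaries are implemented as a time-to-live plus a `(user_id, task_id)` scope. `ScopedMemory` and its methods are hypothetical names for illustration, not an API from the book or any library.

```python
import time
from collections import defaultdict


class ScopedMemory:
    """Memory partitioned by (user_id, task_id), with entries that expire."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested
        self._store = defaultdict(list)  # scope -> [(timestamp, note)]

    def remember(self, user_id: str, task_id: str, note: str) -> None:
        self._store[(user_id, task_id)].append((self._clock(), note))

    def recall(self, user_id: str, task_id: str) -> list[str]:
        """Return only unexpired notes from this exact scope."""
        cutoff = self._clock() - self._ttl
        # .get avoids creating empty scopes as a side effect of reading
        entries = self._store.get((user_id, task_id), [])
        return [note for ts, note in entries if ts >= cutoff]


now = [0.0]  # fake clock for the demonstration
memory = ScopedMemory(ttl_seconds=60, clock=lambda: now[0])
memory.remember("alice", "intake", "allergic to penicillin")
same_scope = memory.recall("alice", "intake")   # sees the note
other_user = memory.recall("bob", "intake")     # sees nothing
now[0] = 120.0
after_expiry = memory.recall("alice", "intake")  # note has aged out
```

The design choice is that recall requires the exact scope: there is no "search everything" path, so one user's history cannot bleed into another's record by accident.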

This is why Agentic Engineering matters. It is not an abstraction. It is a map drawn from failure and repair, organized into practices that enterprises can apply immediately.

A discipline becomes real when it can be taught, repeated, and scaled. With these 19 practice areas, Agentic Engineering is no longer an idea. It is a profession in the making.

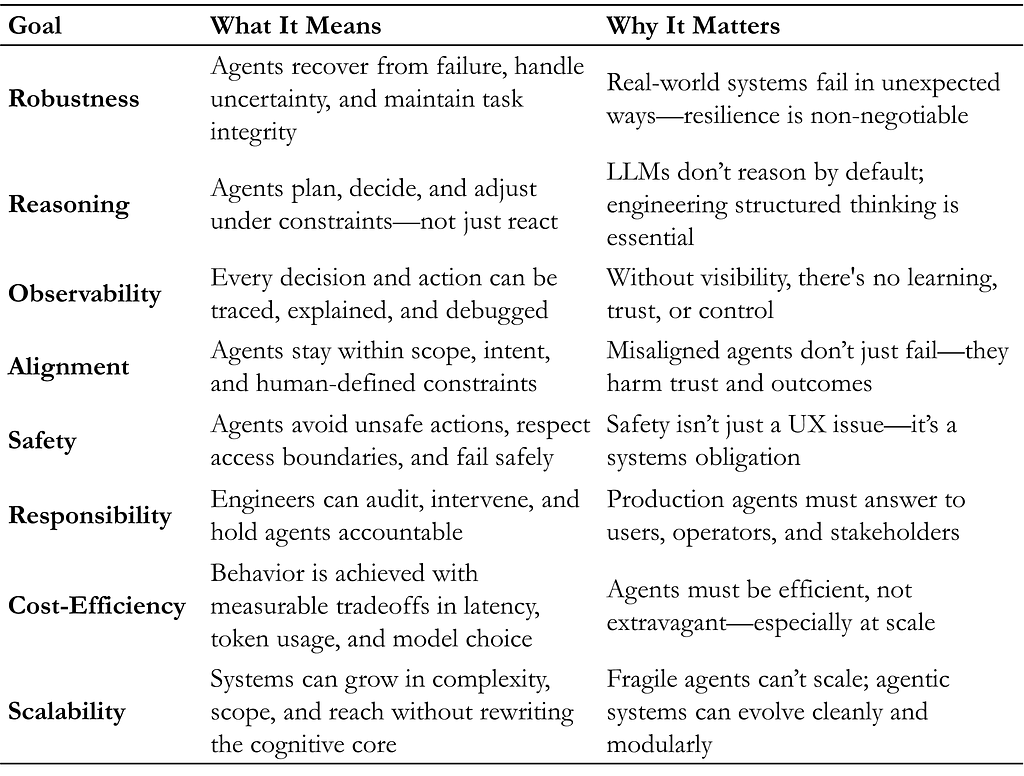

The Eight Invariants of Agentic Engineering

Every mature discipline is held together by laws that cannot be ignored.

Medicine has hygiene.

Aviation has checklists.

Software has modularity and maintainability.

Agentic Engineering has its own invariants — principles as binding to cognition as gravity is to physics. They unify the 19 practice areas into a single discipline, and they close the Ten Fault Lines that make agents fragile.

The Eight Invariants of Agentic Engineering

Never Fail Silently (Robustness)

An aircraft never disappears from radar without a signal. Agents must also show when they are in trouble. Silent failure is the most dangerous failure.

Plan Before You Act (Reasoning)

A surgeon never cuts without a plan. Agents must deliberate before executing. Chained prompts without reasoning create plan fragility.

Make Reasoning Visible (Observability)

You cannot trust what you cannot see. Observability turns cognition from a black box into a traceable system that can be audited and improved.

Stay in Bounds (Alignment)

A bridge is safe because it knows its load limits. Agents must remain within their intended scope. Oversight prevents agent drift and uncontrolled expansion.

Fail Safe, Not Fast (Safety)

In aviation, engines are designed to degrade gracefully rather than explode. Agents too must fall back without causing damage when uncertainty strikes.

Keep Humans in the Loop (Responsibility)

Autopilot does not replace pilots. Oversight, intervention, and accountability are not optional. The moment you cannot override an agent is the moment you no longer own it.

Spend Only What You Must (Cost Efficiency)

Every engineer knows that waste weakens resilience. Agents must use compute, memory, and context efficiently. Cost efficiency is not only financial; it is architectural.

Grow Without Rewrites (Scalability)

Infrastructure scales because it is composable with reusable components. Agents must evolve across tasks and teams without collapsing under their own complexity. If they cannot grow, they will eventually break.
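
Three of these invariants (never fail silently, make reasoning visible, fail safe) can be illustrated together with a small step runner. This is a sketch under stated assumptions: `StepResult`, `run_step`, and the status strings are hypothetical, and a production runtime would add timeouts, structured tracing, and budgets that are omitted here.

```python
import logging
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

logger = logging.getLogger("agent")


@dataclass
class StepResult:
    name: str
    status: str  # "ok", "fallback", or "failed"
    value: Any = None
    trace: list[str] = field(default_factory=list)


def run_step(name: str,
             action: Callable[[], Any],
             fallback: Optional[Callable[[], Any]] = None) -> StepResult:
    """Run one agent step and always return an explicit, traceable result."""
    result = StepResult(name=name, status="ok")
    try:
        result.value = action()
        result.trace.append(f"{name}: action succeeded")
    except Exception as exc:  # broad by design: no error may vanish silently
        result.trace.append(f"{name}: action raised {type(exc).__name__}: {exc}")
        logger.warning("step %s failed: %s", name, exc)
        if fallback is None:
            result.status = "failed"
        else:
            # An error inside the fallback propagates to the caller.
            result.value = fallback()
            result.status = "fallback"
            result.trace.append(f"{name}: used fallback")
    return result


def flaky_lookup() -> str:
    raise TimeoutError("upstream timed out")


degraded = run_step("lookup", flaky_lookup, fallback=lambda: "cached answer")
failed = run_step("lookup", flaky_lookup)
```

Every outcome carries a status and a trace, so a caller can tell a fresh answer from a degraded one, and a step with no safe fallback reports `failed` instead of returning nothing.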

These invariants are not features. They are the scaffolding that turns agents from brittle experiments into durable infrastructure. They bind practices together into a system of trust.

They are what separate a clever demo from an engineered discipline.

A New Operating System for Intelligence

The Eight Invariants are not suggestions. They are the scaffolding of a new kind of system. When honored together across the 19 practice areas, they close the Ten Fault Lines and transform agents from brittle experiments into durable infrastructure.

But a discipline is more than a list of practices. It is a way of seeing.

Software engineering taught us that code is not just instructions; it is architecture. DevOps taught us that deployment is not just delivery; it is a living pipeline. Agentic Engineering now teaches us that cognition is not just output; it is an operating system in motion.

Think of the early days of computing. Programs ran directly on hardware, fragile and error prone. Only with the invention of operating systems did software become reliable, reusable, and trustworthy at scale.

We stand in the same place with intelligence. Agents today are improvised scripts and prototypes. Without boundaries, they hallucinate. Without guardrails, they misuse tools. Without oversight, they drift.

Agentic Engineering provides the operating system that intelligence has always lacked. It supplies the architecture and scaffolding that make cognition governable. In my book, Agentic AI Engineering, I lay out this foundation as a blueprint. It includes the Agentic Stack, the maturity ladders that chart the climb from fragile prototypes to enterprise-grade and regulatory-grade systems, the frameworks and protocols that anchor resilience, the design patterns and anti-patterns that distinguish what endures from what collapses, the field lessons from real-world failures and recoveries, and the best practices and code examples that prove these ideas can be built today.

This is not speculation. It is the beginning of a field.

Intelligence can be engineered now. Not improvised, not guessed at, but designed with rigor, governed with care, and operated with trust.

Just as no modern application runs without an operating system beneath it, no serious AI system will endure without Agentic Engineering as its foundation.

When the Fault Lines Broke

In my work with many clients, I have seen what happens when the fault lines are ignored. The patterns are consistent across industries, even if the contexts differ.

A healthcare team built an intake assistant that looked perfect in controlled pilots. But in production, context collapse and leaky memory surfaced together. Patient histories bled into the wrong records. Conversations froze when a backend faltered. Silent failures went unnoticed until trust was already lost.

In finance, I watched a risk-analysis agent fall victim to blind autonomy. When a regulatory rule changed upstream, the agent kept approving transactions with complete confidence. No alarms, no alerts, just systematic error until auditors uncovered the breach.

In biotech, I worked with a compliance team preparing for an FDA audit. Their agent promised efficiency but collapsed under pressure. It hallucinated evidence, misaligned its metrics, and failed silently when a vendor API drifted. For a moment, millions of dollars were at risk.

What turned these projects around was not another prompt tweak or model swap. It was applying the disciplines of Agentic Engineering. Scoped memory closed leaks. Governance and oversight prevented drift. Observability made reasoning visible. Guardrails and validation stopped tool misuse. Saliency routing kept agents focused on what mattered.
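
As a rough illustration of what guardrails and oversight can look like in code, the sketch below gates every tool call behind an allowlist and, for sensitive tools, a human approval callback. The `POLICY` table, tool names, `OutOfScopeError`, and `guarded_call` are hypothetical and do not describe the systems from these engagements.

```python
from typing import Callable

# Hypothetical policy: tool name -> whether a human must approve each call.
POLICY: dict[str, bool] = {
    "read_record": False,
    "approve_transaction": True,
}


class OutOfScopeError(Exception):
    """Raised when the agent requests a tool outside its mandate."""


def guarded_call(tool: str,
                 run: Callable[[], str],
                 approve: Callable[[str], bool]) -> str:
    """Run a tool only if policy allows it and any required approval is given."""
    if tool not in POLICY:
        raise OutOfScopeError(f"tool '{tool}' is not in the agent's mandate")
    if POLICY[tool] and not approve(tool):
        return f"'{tool}' blocked: human approval denied"
    return run()


def deny(tool_name: str) -> bool:
    """Stand-in for a human reviewer who rejects the request."""
    return False


read = guarded_call("read_record", lambda: "record", approve=deny)
blocked = guarded_call("approve_transaction", lambda: "approved", approve=deny)
```

Reads pass through without review, the transaction is blocked because the reviewer declined, and any tool missing from the policy raises immediately, which keeps scope expansion an explicit decision rather than a quiet drift.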

In each case, the same thing happened. Fragile prototypes became resilient systems. Teams moved from fear of failure to trust in production.

These are not theoretical lessons. They are scars and repairs from the field. And they prove the same point: AI agents will continue to break along these fault lines until we engineer them differently.

The Dawn of a Discipline

Every revolution finds its discipline.

Medicine found hygiene.

Aviation found aeronautical engineering.

Software found software engineering in 1968.

Now intelligence finds Agentic Engineering.

We have reached the moment when clever demos are no longer enough. Fragile copilots collapse in production. Enterprises stall in endless pilots. Regulators hesitate because trust is not engineered in. The world does not need more experiments. It needs a field that can stand.

That is what Agentic Engineering is: a discipline that turns cognition into infrastructure. It seals the Ten Fault Lines. It organizes 19 practice areas into a coherent framework. It defines the Eight Invariants that bind agents to the same standards of safety, reliability, and trust that we expect in every other engineering discipline.

I wrote Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems to codify this foundation. At nearly 600 pages, it is not a playbook of tricks but the blueprint of a profession. It distills lessons from the field, from healthcare and finance to biotech and logistics. It contains the Agentic Stack, the maturity ladders, the design patterns and anti-patterns, and the best practices and code examples that practitioners can apply immediately.

These are not abstractions. They are the building blocks of a new operating system for intelligence itself.

The demos have already lied. Now we must tell the truth: intelligence can be engineered.

The future of AI will not be won by those who ship the flashiest copilots. It will be won by those who engineer cognition with the same rigor past generations applied to code, networks, and flight.

We are the founding generation. The discipline is being born. The only question is whether you will step forward to shape it, guide it, and lead in the era of engineered intelligence.

References and Further Reading

Yi Zhou. “Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems.” ArgoLong Publishing, September 2025.

Yi Zhou. “New Book: Agentic AI Engineering for Building Production-Grade AI Agents.” Medium, September 2025.

Yi Zhou. “Software Engineering Isn’t Dead. It’s Evolving into Agentic Engineering.” Medium, September 2025.

Yi Zhou. “Agentic AI Engineering: The Blueprint for Production-Grade AI Agents.” Medium, July 2025.

Every Revolution Demands a Discipline. For AI, It’s Agentic Engineering. was originally published in Agentic AI & GenAI Revolution on Medium.