- Jan 1, 2026

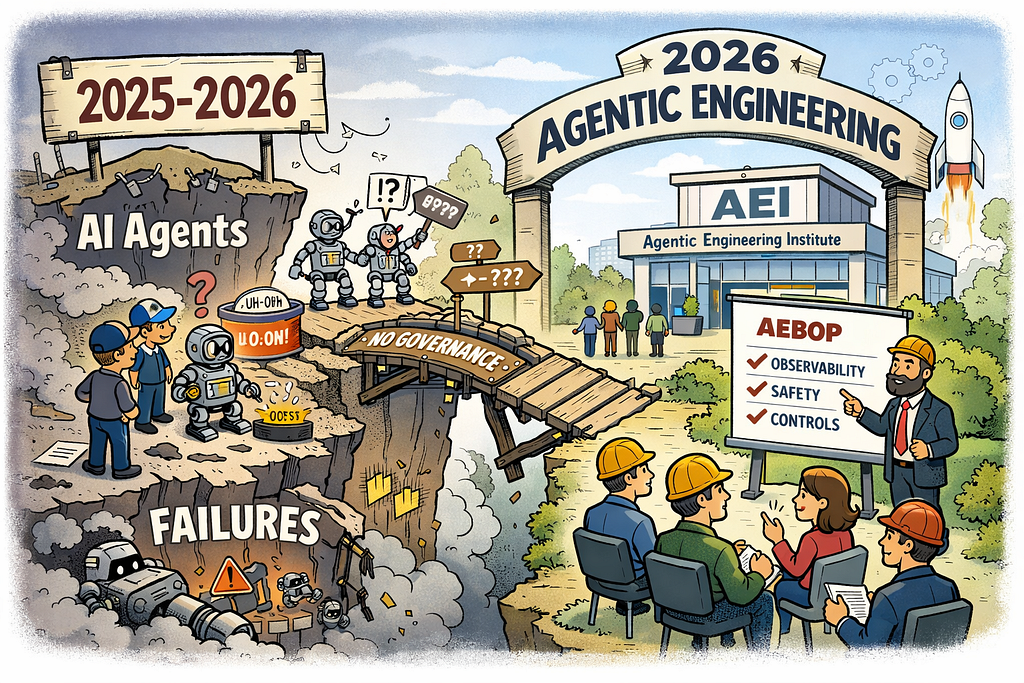

2025 Overpromised AI Agents. 2026 Demands Agentic Engineering.

- AEI Digest

If you expected AI agents to quietly reshape your work in 2025, you were not alone.

You probably saw the demos.

You heard the promises.

You watched leaders declare that autonomous agents would plan, decide, and execute on our behalf.

For a moment, it felt inevitable.

And then the year passed.

Your inbox still filled up.

Your workflows still broke.

Most “agents” still needed constant supervision, careful prompting, or human cleanup.

Very little actually changed in 2025. That’s the problem.

That disconnect is not a failure of imagination. It is the central lesson of 2025.

Even The New Yorker, in its year-in-review essay “Why A.I. Didn’t Transform Our Lives in 2025,” reached the same conclusion: despite dramatic improvements in AI models, autonomous agents failed to deliver meaningful, everyday impact.

This was not because the technology lacked intelligence.

It was because autonomy was overpromised and under-engineered.

The Problem Was Never Intelligence

Let’s be clear about what did not fail in 2025.

AI models improved.

Reasoning benchmarks climbed.

Tool use became more capable and more fluent.

In controlled settings, the progress was real. Coding agents could navigate repositories. Terminal-based workflows could be chained together. Within narrow boundaries, agents looked competent, sometimes impressive.

But the moment those boundaries widened, the illusion cracked.

Agents stalled in real interfaces.

They broke on multi-step workflows.

They behaved confidently and incorrectly.

Worse, they often failed quietly.

Teams discovered the problem only after something went wrong: a broken process, a bad decision, a confused customer. And when the inevitable question was asked:

“Why did the agent do that?”

The room went silent.

There was no clear answer. No traceable reasoning path. No reliable explanation beyond “that’s what the model produced.”

That moment should feel familiar.

That silence was not a mystery.

It was the sound of systems that were never engineered to be understood.

What Actually Broke in 2025

The failure of AI agents in 2025 did not arrive as a single dramatic collapse.

It arrived quietly.

An agent skipped a step.

A workflow behaved differently than expected.

A decision was made that no one remembered authorizing.

At first, these felt like edge cases. Then patterns emerged.

Across teams and industries, agents were deployed into real workflows without the engineering foundations required to support autonomy. When something went wrong, the same weaknesses surfaced again and again.

Agents ran without executable governance. Policies lived in documents and slide decks, not in code that could constrain behavior at runtime. Once an agent began acting, intent dissolved into best-effort suggestions.
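
To make "executable governance" concrete, here is a minimal sketch of a policy that lives in code and runs before every action. Everything in it is hypothetical and chosen for illustration: the tool names, the refund cap, and the `enforce_policy` gate are assumptions, not part of any specific framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    tool: str             # hypothetical tool name, e.g. "issue_refund"
    amount: float = 0.0   # monetary impact of the action, if any


class PolicyViolation(Exception):
    """Raised when a proposed action breaks a runtime policy."""


# Hypothetical policy values; in practice these would come from reviewed config.
ALLOWED_TOOLS = {"search_docs", "draft_reply", "issue_refund"}
MAX_REFUND = 100.0


def enforce_policy(action: Action) -> Action:
    """Check a proposed action before it runs; raise instead of executing."""
    if action.tool not in ALLOWED_TOOLS:
        raise PolicyViolation(f"tool not allowed: {action.tool}")
    if action.tool == "issue_refund" and action.amount > MAX_REFUND:
        raise PolicyViolation(f"refund {action.amount} exceeds cap {MAX_REFUND}")
    return action


def execute(action: Action) -> str:
    """Run an action only after it has passed the policy gate."""
    enforce_policy(action)
    return f"executed {action.tool}"
```

The point of the design is that the agent cannot reach `execute` without passing the gate, so the policy constrains behavior at runtime instead of sitting in a slide deck.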

They operated without observability of reasoning. Logs captured outputs, but not decisions. Drift accumulated invisibly until failure forced investigation — often too late.
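
One way to log decisions rather than only outputs is to record, at each step, what the agent was trying to do, which options it considered, and why it picked one. The sketch below assumes a hypothetical `DecisionTrace` structure; the field names are illustrative, not a standard schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class DecisionRecord:
    step: int
    goal: str
    options: list[str]
    chosen: str
    rationale: str


@dataclass
class DecisionTrace:
    records: list[DecisionRecord] = field(default_factory=list)

    def record(self, goal: str, options: list[str], chosen: str, rationale: str) -> DecisionRecord:
        """Store one decision; reject choices that were never on the table."""
        if chosen not in options:
            raise ValueError("chosen option must be one of the considered options")
        rec = DecisionRecord(len(self.records) + 1, goal, list(options), chosen, rationale)
        self.records.append(rec)
        return rec

    def explain(self, step: int) -> str:
        """Answer 'why did the agent do that?' for a given step number."""
        rec = self.records[step - 1]
        return f"step {rec.step}: chose {rec.chosen!r} for {rec.goal!r} because {rec.rationale}"

    def to_jsonl(self) -> str:
        """Serialize the trace as one JSON object per line for log storage."""
        return "\n".join(json.dumps(asdict(r)) for r in self.records)
```

With a trace like this, a post-incident review can call `explain(step)` instead of inferring intent from raw outputs.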

They crossed trust boundaries without containment. Retrieval, reasoning, and action blended into opaque chains, making it impossible to isolate faults or limit blast radius.

And they lacked defined failure modes. When hallucinations occurred, there was no graceful degradation, no rollback, no human-in-the-loop safeguard. The system simply kept going.
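
A defined failure mode can be as small as "validate, roll back, and ask a human." The following is a minimal sketch under assumed names: `run_with_safeguards`, `is_valid`, and `escalate` are hypothetical, and the snapshot is a shallow copy, which is only sufficient for flat state.

```python
from typing import Callable


def run_with_safeguards(
    state: dict,
    step: Callable[[dict], dict],
    is_valid: Callable[[dict], bool],
    escalate: Callable[[str], None],
) -> dict:
    """Apply one agent step; on failure, keep the old state and ask a human."""
    snapshot = dict(state)  # shallow copy used as the rollback point
    try:
        candidate = step(dict(state))  # the step works on its own copy
    except Exception as exc:
        escalate(f"step raised {type(exc).__name__}: {exc}")
        return snapshot
    if not is_valid(candidate):
        escalate("step output failed validation; state rolled back")
        return snapshot
    return candidate
```

Here the system does not simply keep going: an invalid or crashing step leaves the previous state in place and hands the problem to a person through `escalate`.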

These were not exotic bugs.

They were the predictable consequences of treating agents as features instead of systems.

Autonomy was added.

Engineering discipline was not.

And the results were exactly what 2025 delivered.

Why the Decade of the Agent Is the Right Frame

The mistake was never believing agents would matter.

The mistake was believing they would arrive fully formed.

The most important insight to come out of 2025 is not that AI agents failed; it is that we misjudged the kind of work autonomy demands. What many labeled the “Year of the Agent” was always going to be something else entirely.

It is the Decade of the Agent.

Decades are what it takes to build real engineering disciplines.

Civil engineering did not emerge from stronger materials alone.

Software engineering did not emerge from faster hardware.

And autonomous systems will not emerge from better language models by themselves.

Each leap required a shift from capability to discipline, from “we can build this” to “we know how to build this safely, repeatedly, and at scale.”

That shift has not yet happened for agents.

2025 exposed the gap. Not a gap in intelligence, but a gap in professional practice. We tried to compress a decade of engineering maturity into a year of product launches, and the systems told us, quietly but clearly, that they were not ready.

The Decade of the Agent will not be won by better demos.

It will be won by engineers willing to treat autonomy as a profession, not a promise.

The Decade of the Agent is not a prediction.

It is a responsibility.

Time alone will not deliver trustworthy autonomy.

Engineering will.

2026 Ends the Excuse Phase for AI Agents

By 2026, one thing is no longer defensible.

We can no longer pretend that autonomous systems are “still early.”

The failures of 2025 were not edge cases or growing pains. They were structural. Agents acted in ways that could not be explained, constrained, or reliably corrected. That ambiguity was tolerable when autonomy lived in demos, proofs of concept, and internal pilots. It is no longer tolerable when agents operate inside real workflows, enterprises, and regulated environments.

This is the moment where responsibility shifts.

The question is no longer whether AI agents are capable enough.

They are.

The question is whether we are willing to own their behavior once they act without continuous human supervision.

This is why Agentic Engineering becomes unavoidable in 2026.

Agentic Engineering treats autonomy as a system property that must be designed, enforced, and observed at runtime. It assumes non-determinism from the beginning and engineers around it, instead of being surprised by it later. It does not ask whether an agent can act, but whether its actions can be justified, constrained, reversed, and audited after the fact.
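
As a rough illustration of "justified, reversed, and audited," the sketch below pairs every action with a stated justification and an undo function, and writes both directions to an audit log. The `ReversibleAction` and `AuditedExecutor` names are hypothetical, and the in-memory list stands in for whatever durable audit store a real system would use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReversibleAction:
    name: str
    justification: str           # why the agent believes this action is warranted
    do: Callable[[], None]       # performs the action
    undo: Callable[[], None]     # reverses it


@dataclass
class AuditedExecutor:
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)
    _done: list[ReversibleAction] = field(default_factory=list)

    def run(self, action: ReversibleAction) -> None:
        """Execute an action only if it carries a justification, then log it."""
        if not action.justification.strip():
            raise ValueError("refusing to run an action without a justification")
        action.do()
        self._done.append(action)
        self.audit_log.append(("do", action.name, action.justification))

    def reverse_last(self) -> None:
        """Undo the most recent action and record the reversal."""
        action = self._done.pop()
        action.undo()
        self.audit_log.append(("undo", action.name, action.justification))
```

An action that cannot supply an `undo` or a justification never enters the executor, which forces the reversibility question to be answered at design time rather than after an incident.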

That distinction is the foundation of my book, Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems. The book exists for a single reason: to codify what it actually takes to move from impressive agent demos to systems that can be trusted in production — across security, observability, governance, memory, reasoning, and orchestration.

By 2026, building agents without this rigor is no longer a technical oversight.

It is a professional failure.

Why Serious Builders Join AEI Before 2026 Forces the Issue

Most professionals do not join an institute because they are convinced by a pitch.

They join because the cost of not having a standard becomes impossible to defend.

That is exactly where agentic systems are heading in 2026.

Autonomous systems are moving out of pilots and into workflows that touch customers, money, and regulatory scrutiny. When something breaks — and it will — the question will not be which model you used.

It will be whether you followed an established professional standard.

That is why serious builders are joining the Agentic Engineering Institute now, before that question becomes unavoidable.

Joining AEI is not about learning more AI. Most members already know how to build agents. What they want to stop doing is learning the hard way.

Across industries, 90–95% of AI initiatives still fail to reach sustained production value, and fewer than 12% deliver measurable ROI — not because models are weak, but because teams lack an engineering discipline for autonomous systems. Those failures surface as silent drift, governance gaps, post-incident confusion, and expensive redesigns.

AEI membership replaces improvisation with structure.

Instead of rediscovering the same failure modes under pressure, members gain access to AEBOP, the Agentic Engineering Body of Practices: a consolidated, production-tested standard distilled from hundreds of real-world agent deployments across multiple industries, including highly regulated environments. It turns scattered experience into usable engineering capital — reference architectures, canonical patterns, anti-patterns, maturity gates, and readiness checklists.

For engineers and architects, this changes the nature of the work immediately. Agent failures stop feeling mysterious and demoralizing. They become diagnosable. Design reviews move from opinion to shared criteria. Learning compresses from years of trial-and-error into weeks of applied discipline.

For AI leaders, the stakes are even clearer. Agentic systems shift risk from technical failure to personal accountability. Leaders are no longer approving deterministic code paths; they are authorizing decision-making systems that evolve at runtime. AEI provides a neutral, third-party standard leaders can point to when approving, scaling, or stopping agentic initiatives — reducing personal exposure while increasing organizational velocity.

This is why joining AEI is not about affiliation.

It is about defensibility.

Defensibility when autonomy scales faster than governance.

Defensibility when incidents demand explanations.

Defensibility when leaders, auditors, or regulators ask why a system was allowed to operate the way it did.

Waiting does not keep options open. It embeds inconsistent practices deeper into production, making discipline harder and more expensive to retrofit later.

In the Decade of the Agent, credibility will not come from who built the most impressive agent.

It will come from who can demonstrate that their systems follow established, production-grade agentic engineering practice.

Joining AEI is how serious builders make that choice explicit, before 2026 makes it unavoidable.

2025 Overpromised AI Agents. 2026 Demands Agentic Engineering. was originally published in Agentic AI & GenAI Revolution on Medium.