- Oct 20, 2025

OpenAI Cofounder Warned of an AI Agent Crisis — Agentic Engineering Is the Way Forward.

- AEI Digest

1. The Warning We Can’t Ignore

A decade.

That’s how long Andrej Karpathy believes it will take before AI agents truly work. In his recent Business Insider interview, the OpenAI founding member and Tesla Autopilot visionary didn’t mince words:

“AI agents just don’t work yet. They’re not multimodal enough, they don’t remember, they can’t plan — and it’ll take about a decade before they actually work.”

It was less a criticism than a confession from someone who helped build the machine itself.

And he’s right — to a point. Today’s AI agents are astonishing in bursts and brittle in practice. They forget what they were doing, confuse instructions, and stumble through tasks that require context or continuity. Memory leaks. Reliability collapses.

I’ve seen this firsthand advising global enterprises chasing “digital coworkers.” The pattern is the same: dazzling demos, disappointing deployment.

So yes — I agree with Karpathy’s realism. We are far from the era of fully autonomous, trustworthy agents.

But here’s where I disagree — or more precisely, where I challenge his assumption.

Karpathy implies that because current agents are unreliable, we must wait for smarter AI models before they become viable. That assumption is understandable. But it’s also dangerously incomplete.

We don’t need to wait for more capable intelligence.

We need to engineer more capable systems around it.

Waiting for better models is like the early aviators waiting for better physics before attempting flight. The Wright brothers didn’t wait — they engineered around instability. They didn’t demand smarter air; they designed smarter wings.

The same principle applies here. The problem isn’t intelligence itself; it’s how we integrate it, constrain it, and collaborate with it.

That is the central message of my new book, Agentic AI Engineering, which has just been released. It defines a new discipline for this moment — one where humans and AI systems co-operate through structure, feedback, and governance.

Because this isn’t just an upgrade to software development.

It’s the birth of the next great engineering discipline:

Agentic Engineering — the craft of building, governing, and partnering with intelligent systems.

If we fail to shape this discipline, the decade Karpathy predicts won’t just delay progress — it will define whether humans remain in control of intelligence at all.

2. The Turning Point — and the Hidden Assumption We Must Challenge

History repeats itself — but the stakes grow higher each time.

In 1968, the world faced a crisis of its own making. Software projects were collapsing under complexity. Systems crashed. Costs exploded. The term “software engineering” took hold at that year’s NATO Software Engineering Conference not to describe a technology, but to impose discipline on an unruly new form of creation.

That moment saved the digital revolution. We learned that code could not scale without structure — and discipline could turn chaos into civilization.

Today, we stand in the sequel. This time, it’s not software that’s collapsing — it’s intelligence.

We are training systems that can reason, plan, and decide. But we’re still managing them with software-era tools: linear pipelines, brittle APIs, and one-shot prompts. We’ve wrapped frontier models in thin shells and called them “agents.”

Then we wonder why they fall apart.

And here lies the hidden assumption embedded in Karpathy’s warning:

“If AI agents aren’t reliable yet, there’s nothing more we can do until AI itself gets better.”

That assumption sounds logical. It’s also fatal.

Because engineering has never been about waiting for perfection — it’s about designing around imperfection.

The Wright brothers didn’t wait for aerodynamics textbooks. They experimented, failed, adjusted, and built feedback into the wings themselves. The same principle must now guide how we design intelligent systems.

The problem isn’t that our models can’t think — it’s that our systems don’t orchestrate that thinking.

What we need isn’t bigger or smarter models; it’s a new engineering discipline that integrates human judgment, cognitive oversight, and ethical control into every loop of machine reasoning.

That discipline is Agentic Engineering.

If Software Engineering was the operating system for the digital age, Agentic Engineering is the governance system for the intelligence age. It defines how humans and AI share cognition, memory, and responsibility. It ensures that autonomy never outruns accountability.

My book Agentic AI Engineering lays out this blueprint, and the soon-to-launch Agentic Engineering Institute (AEI) will train the first generation of practitioners: engineers, architects, and leaders who will build intelligent systems that don’t just work, but behave reliably and responsibly.

Because waiting for intelligence to mature is not strategy — it’s surrender. The real question is whether we will have the discipline to engineer how intelligence behaves before it decides for itself.

3. The AI Agent Crisis — Why Karpathy Was Right

Andrej Karpathy didn’t sound bitter when he spoke to Business Insider — he sounded tired. Not from pessimism, but from proximity. Few people have built as close to the AI frontier as he has. When he says, “AI agents just don’t work yet,” he’s not exaggerating — he’s summarizing a truth every serious practitioner feels but rarely admits.

We are living through the AI Agent Crisis — a gap not of ambition, but of architecture. Every breakthrough model promises agency; every deployment exposes fragility. The symptoms are everywhere: hallucinated reasoning, broken memories, forgotten goals, infinite loops. What begins as intelligence ends as noise.

Let’s unpack why Karpathy is right — and where we go next.

A. Memory: The Mirage of Continuity

Karpathy’s first frustration is one every builder knows: AI doesn’t remember. Each new session starts from zero. Context fades, instructions vanish, identity resets. What should feel like a partnership instead feels like amnesia.

Large models can recall information within a conversation, but they don’t yet own persistent memory — not one that survives across time, context, and interaction. Without memory, there can be no trust.

Agentic Engineering fixes this not with more tokens or larger context windows, but with structured memory systems — external, modular components that record, retrieve, and reason over state. Memory becomes explicit, not emergent; verifiable, not fragile.

In an agentic system, memory is engineered the same way databases were once engineered for software — with schemas, permissions, and feedback loops. AI remembers what it should, when it should, under human governance.
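To make “schemas, permissions, and feedback loops” concrete, here is a minimal sketch of an external memory component. The names `MemoryRecord` and `MemoryStore`, the agent names, and the in-process dictionary are illustrative assumptions, not an API from the book or any library; a production system would sit on a real datastore.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class MemoryRecord:
    """One explicit, typed memory entry (the hypothetical 'schema')."""
    key: str
    value: str
    written_by: str
    written_at: str


@dataclass
class MemoryStore:
    """External memory with per-agent write permissions and a write log."""
    writers: set[str]                                   # agents allowed to write
    records: dict[str, MemoryRecord] = field(default_factory=dict)
    log: list[MemoryRecord] = field(default_factory=list)

    def write(self, agent: str, key: str, value: str) -> MemoryRecord:
        if agent not in self.writers:
            raise PermissionError(f"{agent} may not write to memory")
        record = MemoryRecord(
            key, value, agent, datetime.now(timezone.utc).isoformat()
        )
        self.records[key] = record
        self.log.append(record)        # every accepted write stays auditable
        return record

    def read(self, key: str) -> str | None:
        record = self.records.get(key)
        return record.value if record else None


# Only the (hypothetical) planner agent is allowed to write.
store = MemoryStore(writers={"planner"})
store.write("planner", "user_goal", "book a trip to Lisbon")
```

The point of the sketch is that state lives outside the model, and every write is typed, attributed, and permission-checked before it is accepted.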

B. Planning: Intelligence Without Strategy

Karpathy is also right that today’s agents can’t plan. They can generate steps but don’t understand sequencing, dependencies, or constraints. Ask an agent to book a trip, design a workflow, or execute a multi-step research task, and it will often get lost in its own logic.

Why? Because we’ve mistaken improvisation for planning.

Agentic Engineering treats planning as an orchestrated cognitive layer, not a side effect of reasoning. It separates what must be done from how it is done — integrating human oversight where the system is most fallible.

In an agentic workflow, a Planner Agent proposes a strategy, a Verifier Agent evaluates feasibility, and a Human Architect supervises before execution. This isn’t micromanagement; it’s engineered governance — the same principle that makes air traffic control essential to aviation.
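The propose, verify, approve sequence can be sketched in a few lines. Everything here is a stand-in chosen for illustration: `run_governed_plan`, the toy planner and verifier, and the `approve` callback that represents the human architect are assumptions, not a reference implementation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Plan:
    goal: str
    steps: list[str]


def run_governed_plan(
    goal: str,
    planner: Callable[[str], Plan],
    verifier: Callable[[Plan], list[str]],   # returns a list of objections
    approve: Callable[[Plan], bool],          # stand-in for the human architect
    execute: Callable[[str], str],
) -> list[str]:
    """Propose, verify, get sign-off, and only then execute each step."""
    plan = planner(goal)
    objections = verifier(plan)
    if objections:
        raise ValueError(f"plan rejected by verifier: {objections}")
    if not approve(plan):
        raise PermissionError("plan not approved by human reviewer")
    return [execute(step) for step in plan.steps]


# Toy stand-ins so the sketch runs end to end.
def toy_planner(goal: str) -> Plan:
    return Plan(goal, ["search flights", "hold seat", "pay"])


def toy_verifier(plan: Plan) -> list[str]:
    # Dependency check: payment must come after the seat is held.
    if "pay" in plan.steps and "hold seat" in plan.steps:
        if plan.steps.index("pay") < plan.steps.index("hold seat"):
            return ["'pay' scheduled before 'hold seat'"]
    return []


results = run_governed_plan(
    "book a trip",
    toy_planner,
    toy_verifier,
    approve=lambda plan: len(plan.steps) <= 5,
    execute=lambda step: f"done: {step}",
)
```

Nothing executes until both the verifier and the reviewer have had their say, which is the separation of what must be done from how it is done.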

Good planning in AI doesn’t come from more intelligence — it comes from disciplined orchestration.

C. Reliability: The Fragility Problem

Finally, Karpathy’s most important point: reliability. He doesn’t say agents are stupid; he says they’re unreliable. Sometimes brilliant, sometimes useless, often unpredictable — a roller coaster of capability.

Enterprises don’t fail because agents are uncreative. They fail because agents are untrustworthy. A system that works nine times out of ten but fails invisibly the tenth time isn’t usable — it’s dangerous.
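The arithmetic gets harsher for multi-step work. As an illustration only, assume a task chains n steps and each step succeeds independently with probability p; the symbols, the numbers, and the independence assumption are illustrative, not figures from the interview.

```latex
P(\text{all } n \text{ steps succeed}) = p^{n},
\qquad p = 0.9,\ n = 10 \;\Rightarrow\; 0.9^{10} \approx 0.35
```

Under those assumptions, an agent that is right nine times out of ten per step completes a ten-step task cleanly only about a third of the time.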

Agentic Engineering solves reliability the way Software Engineering solved uptime: through verification, feedback, and redundancy.

Every action is logged.

Every decision is traceable.

Every output is verifiable by design — either by another agent or a human supervisor.

Reliability becomes a property of the system, not the model.

Just as unit testing and continuous integration made software dependable, agentic verification loops make AI agents operationally safe.
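A verification loop of this kind can be sketched as follows. The function `verified_call`, its parameters, and the toy agent are hypothetical names chosen for illustration; the verifier could equally be a second agent or a human reviewer.

```python
from __future__ import annotations

from typing import Callable


def verified_call(
    task: str,
    agent: Callable[[str], str],
    verifier: Callable[[str, str], bool],
    audit_log: list[dict],
    max_attempts: int = 3,
) -> str:
    """Run agent(task) until the verifier accepts, logging every attempt."""
    for attempt in range(1, max_attempts + 1):
        output = agent(task)
        accepted = verifier(task, output)
        audit_log.append(
            {"task": task, "attempt": attempt, "output": output, "accepted": accepted}
        )
        if accepted:
            return output
    # Fail loudly instead of returning an unverified answer.
    raise RuntimeError(
        f"no verified output for {task!r} after {max_attempts} attempts"
    )


# Toy agent that is wrong on its first try, to exercise the loop.
answers = iter(["5", "4"])
log: list[dict] = []
result = verified_call(
    "2 + 2",
    agent=lambda task: next(answers),
    verifier=lambda task, out: out == "4",
    audit_log=log,
)
```

The design choice that matters is the last line of the function: when verification keeps failing, the system raises rather than passing an unchecked answer downstream, so the failure is never invisible.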

The Diagnosis Is Correct — The Assumption Is Not

So yes — Karpathy is right.

AI agents don’t yet remember, plan, or behave reliably enough to operate autonomously. But that’s not because intelligence itself is broken — it’s because we’ve mistaken intelligence for engineering.

The fix won’t come from waiting for smarter models. It will come from architecting smarter systems — systems that embed human judgment, auditability, and feedback as core design principles.

That’s the promise of Agentic Engineering — a discipline built to address precisely the failures Karpathy named.

It doesn’t deny his decade-long prediction; it accelerates through it. Because when you stop waiting for perfection and start engineering around imperfection, progress compounds.

4. What Agentic Engineering Really Is

If Software Engineering was born to manage code, Agentic Engineering is born to manage cognition. It is the discipline that transforms artificial intelligence from a raw capability into a governed collaborator.

For the past decade, we’ve designed systems that use AI — what comes next are systems that coexist with it. That shift demands more than new tools; it demands a new discipline.

A. The Definition

Agentic Engineering is the structured design and governance of systems where humans and AI agents collaborate through enforced rules or policies, feedback, verification, and shared cognition.

It treats agency as an engineering property — something that must be architected, monitored, and improved over time.

Where Software Engineering focused on code correctness, Agentic Engineering focuses on cognitive correctness — ensuring that an AI system’s decisions remain transparent, aligned, and reversible.

It’s the art and science of making intelligence governable.

B. The Foundational Pillars

Just as Software Engineering had modularity, testing, and version control, Agentic Engineering has five foundational pillars of its own: the principles that will define the next half-century of AI practice.

(1) Hybrid Intelligence Workflows

Humans and AI operate as partners, not replacements. The system is designed so that AI executes, proposes, and analyzes — while humans contextualize, prioritize, and verify. The collaboration itself is engineered, not improvised.

(2) Composable Agent Architectures

No single “super-agent” can do everything. Agentic systems use orchestration layers of specialized sub-agents — planners, executors, verifiers, memory agents, skeptics — each with clear roles and boundaries. This modularity brings the same stability to AI that componentization brought to software.

(3) Human-in-the-Loop Protocols

Human oversight isn’t an afterthought — it’s a design requirement. Agentic systems include escalation channels where AI can pause, request input, or transfer control to a human reviewer. The AI learns when to defer, not just how to decide.
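One simple way to express an escalation channel is a routing rule over the agent's own confidence. This is a sketch under stated assumptions: the `Route` values, the `Proposal` fields, and the 0.8 and 0.5 thresholds are invented for illustration, and a self-reported confidence score would need calibration before anyone relied on it.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"          # agent proceeds on its own
    REVIEW = "review"      # human reviews before the action takes effect
    HANDOFF = "handoff"    # human takes over the task entirely


@dataclass
class Proposal:
    action: str
    confidence: float      # agent's self-reported confidence in [0, 1]
    irreversible: bool = False


def route(p: Proposal, review_below: float = 0.8, handoff_below: float = 0.5) -> Route:
    """Decide whether the agent may act, must pause, or must hand over."""
    if p.confidence < handoff_below:
        return Route.HANDOFF
    if p.confidence < review_below or p.irreversible:
        return Route.REVIEW
    return Route.AUTO
```

For example, `route(Proposal("issue refund", 0.95, irreversible=True))` returns `Route.REVIEW`: high confidence alone does not let an irreversible action skip a human.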

(4) The Trust Fabric

Every intelligent system needs observability, explainability, and auditability. Agentic Engineering introduces a trust fabric — a layer of instrumentation that records reasoning paths, decisions, and justifications.

Just as logs and version control made software maintainable, trust fabrics make cognition accountable.
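As one possible building block for such a layer, the sketch below keeps an append-only decision log in which each entry commits to the previous one through a SHA-256 hash, so later edits are detectable. The `TraceLog` class and its field names are assumptions made for illustration; hash chaining is a technique swapped in here, not something the trust fabric is defined to require.

```python
import hashlib
import json


class TraceLog:
    """Append-only decision log; each entry commits to the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, agent: str, decision: str, justification: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "agent": agent,
            "decision": decision,
            "justification": justification,
            "prev": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check the chain links."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trace = TraceLog()
trace.record("planner", "split review into subtasks", "policy section on exposure limits")
trace.record("verifier", "approve subtask 1", "matches credit policy")
intact = trace.verify()
```

If any recorded decision or justification is altered after the fact, `verify()` returns `False`, which gives auditors a cheap integrity check over the reasoning trail.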

(5) Ethical and Operational Governance

Agentic Engineering extends engineering ethics into the realm of machine autonomy. It defines who approves, who supervises, and who is responsible when AI acts. It creates traceable lines of accountability between algorithmic choice and human intention.

C. A New Engineering Mindset

Agentic Engineering represents a complete mental shift:

From building tools to designing collaborators.

From automation to orchestration.

From intelligence as capability to intelligence as responsibility.

It acknowledges that AI’s power isn’t dangerous because it’s smart — it’s dangerous because it’s unstructured. The solution isn’t to limit intelligence but to govern it through engineering.

This is why Agentic Engineering is not “software engineering 2.0.” It’s the successor discipline — the next rung in humanity’s relationship with intelligence.

D. The Blueprint for the Next Century

The newly released Agentic AI Engineering book outlines this foundation in detail — 24 chapters across 19 practice areas, from agentic architecture to governance, reliability, memory design, and cognitive safety. It’s the first formal canon of the discipline.

I founded the Agentic Engineering Institute (AEI) to institutionalize this vision — a global academy, certification ecosystem, and knowledge network empowering Agentic Engineers, Architects, and Leaders to design the next generation of human-AI systems.

Because this isn’t just about making AI useful. It’s about ensuring humanity remains the engineer of intelligence, not merely its beneficiary — or its casualty.

5. Case Studies — How Agentic Engineering Transforms Regulated Industries

Agentic Engineering isn’t theory; it’s a practice I’ve applied inside boardrooms, compliance committees, and research labs. Working alongside executives and engineering leaders in healthcare, financial services, and life sciences, I’ve seen how fragile autonomy becomes reliable once you apply structure, feedback, and governance.

Here are three transformations that show the discipline at work.

A. Healthcare — Turning Automation Anxiety into Clinical-Grade Reliability

When I began advising a global diagnostics company, its “autonomous claims agent” had become a compliance risk — misreading payer rules and frustrating staff.

We reframed the problem through Agentic Engineering principles:

A Dialogue Agent managed structured intake.

A Knowledge Agent drew only from verified payer APIs.

A Human Adjudicator Portal surfaced low-confidence cases for review.

A Trust Fabric traced reasoning paths for HIPAA-grade auditability.

Within six months:

Claim-resolution time improved by roughly 30%.

First-pass adjudication accuracy rose from the mid-70s to about 87%.

Compliance alerts dropped to zero.

Clinicians began describing the system as “a dependable partner.” Agentic Engineering turned automation into accountability.

B. Financial Services — From Model Risk to Cognitive Compliance

At a Fortune 500 bank, a generative-AI credit-review pilot stalled after regulators questioned explainability. Working with the firm’s risk and engineering teams, we built a governed-cognition stack:

A Planner Agent broke reviews into explainable subtasks.

A Verifier Agent cross-checked results against credit policy.

A Human Oversight Board reviewed exceptions.

Every decision was logged in an Agentic Ledger aligned with SR 11-7 controls.

Over nine months, the bank restored regulator confidence, increased review throughput by about 70%, and achieved continuous audit readiness. The same compliance officers who once blocked deployment became its champions, because oversight was now engineered in, not layered on.

C. Life Sciences — From Data Noise to Discovery Acceleration

In a biotech research group, autonomous analysis agents were producing misleading gene-expression hypotheses. Together with the R&D team, we redesigned the workflow as an agentic debate system:

A Hypothesis Agent proposed findings.

A Skeptic Agent stress-tested them statistically.

A Memory Agent tracked rejected hypotheses for learning.

A Human Scientist-in-the-Loop validated outputs before experiments.
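The four roles above can be sketched as a single debate round. This is an illustrative reconstruction, not the research group's actual system: `debate_round`, `DebateMemory`, the p-value threshold, and the example hypotheses are all assumptions made so the sketch runs.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DebateMemory:
    rejected: dict[str, str] = field(default_factory=dict)  # hypothesis -> reason


def debate_round(
    candidates: list[str],
    skeptic: Callable[[str], str | None],   # returns an objection, or None
    memory: DebateMemory,
) -> list[str]:
    """Return hypotheses that survive the skeptic; remember the rest."""
    survivors = []
    for hypothesis in candidates:
        if hypothesis in memory.rejected:
            continue                         # do not re-argue a settled rejection
        objection = skeptic(hypothesis)
        if objection is None:
            survivors.append(hypothesis)     # still needs human validation
        else:
            memory.rejected[hypothesis] = objection
    return survivors


# Made-up p-values standing in for a real statistical test.
p_values = {"gene A up in treated": 0.003, "gene B down in treated": 0.21}


def toy_skeptic(hypothesis: str) -> str | None:
    p = p_values[hypothesis]
    return None if p < 0.01 else f"p = {p} exceeds 0.01"


memory = DebateMemory()
queue_for_scientist = debate_round(list(p_values), toy_skeptic, memory)
```

Survivors go to a queue for the human scientist rather than straight to the bench, and rejected hypotheses are kept with their reasons so the system does not keep proposing them.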

After nine months:

Validated-hypothesis accuracy rose from 61% to about 82%.

Research cycles shortened by about 25%.

Reproducibility in peer review nearly doubled.

Agentic Engineering re-embedded the scientific method inside machine cognition — transforming AI from a noisy assistant into a disciplined collaborator.

Across Industries, One Lesson

Everywhere I’ve worked with AI systems, one truth repeats:

Autonomy fails where architecture is absent.

Agentic Engineering supplies that architecture — the scaffolding that makes intelligence accountable.

Healthcare proved reliability can be engineered.

Finance proved governance can move as fast as innovation.

Life Sciences proved curiosity needs structure to create truth.

These engagements form the early evidence of a larger transformation: the moment when intelligence stops being a tool and becomes a partner — because we finally learned how to engineer the relationship itself.

6. Beyond AGI and ASI — Why Humanity Still Needs Agentic Engineering

The deeper we advance into the age of intelligence, the more we confront a paradox:

the smarter our machines become, the more essential engineering discipline becomes.

Many technologists assume that once we reach Artificial General Intelligence (AGI) or Artificial Superintelligence (ASI), governance will be obsolete — that intelligence will manage itself.

It’s the same illusion that underlies today’s fragile AI agents: that autonomy is self-stabilizing.

It isn’t.

A. The Illusion of Self-Regulating Intelligence

Andrej Karpathy predicted that it may take a decade before AI agents “actually work.” He’s probably right about that timeline. But even when they do work — when they plan, reason, and execute flawlessly — they will still require governance, not because they’re weak, but precisely because they’ll be powerful.

Every historical leap in capability — from steam to software — has created the need for new forms of control, not less.

Power without architecture always devolves into entropy.

Agentic Engineering is how we architect that control at the cognitive level: it defines boundaries, feedback, and purpose within autonomous reasoning itself.

B. Why Intelligence Still Needs Engineers

Superintelligence won’t make human engineering irrelevant; it will make it existential. Even the most capable AI system will operate inside physical, social, and ethical infrastructures designed by people. Every algorithm still depends on goals, reward functions, and data priorities — all human choices in disguise.

Agentic Engineering is the discipline that makes those choices explicit and accountable. It ensures that human judgment is not erased from the loop but encoded into the architecture — through feedback, verification, and oversight mechanisms that scale with intelligence.

Think of it as the governance OS for the Age of Intelligence: a layer of design principles that ensures machines can think freely but not act lawlessly.

C. Governing Intelligence, Not Suppressing It

The purpose of Agentic Engineering isn’t to constrain intelligence; it’s to govern it constructively — to align cognition with context, autonomy with accountability. When designed correctly, governance doesn’t limit innovation; it sustains it.

Without engineered oversight, AGI will optimize for whatever it can measure, not what humanity values.

Without engineered transparency, we’ll have intelligence we can’t interrogate.

Without engineered ethics, we’ll have power we can’t justify.

Agentic Engineering provides the antidote: a way to encode governance as a property of cognition, not an afterthought of deployment.

D. The Enduring Discipline

As Software Engineering became the discipline for code, Agentic Engineering will remain the discipline for cognition — even after intelligence exceeds us.

It won’t expire with AGI; it will mature alongside it. It is the human operating system that keeps meaning, ethics, and accountability alive inside a post-human world.

That’s why the Agentic Engineering Institute (AEI) exists: to prepare the first generation of Agentic Engineers, Architects, and Leaders who will build, audit, and guide intelligent systems that outthink us but never outrun us.

When AGI arrives, the question won’t be, “Can it think?”

It will be, “Can we still engineer how it behaves?”

And that question will define not just the future of technology — but the future of civilization itself.

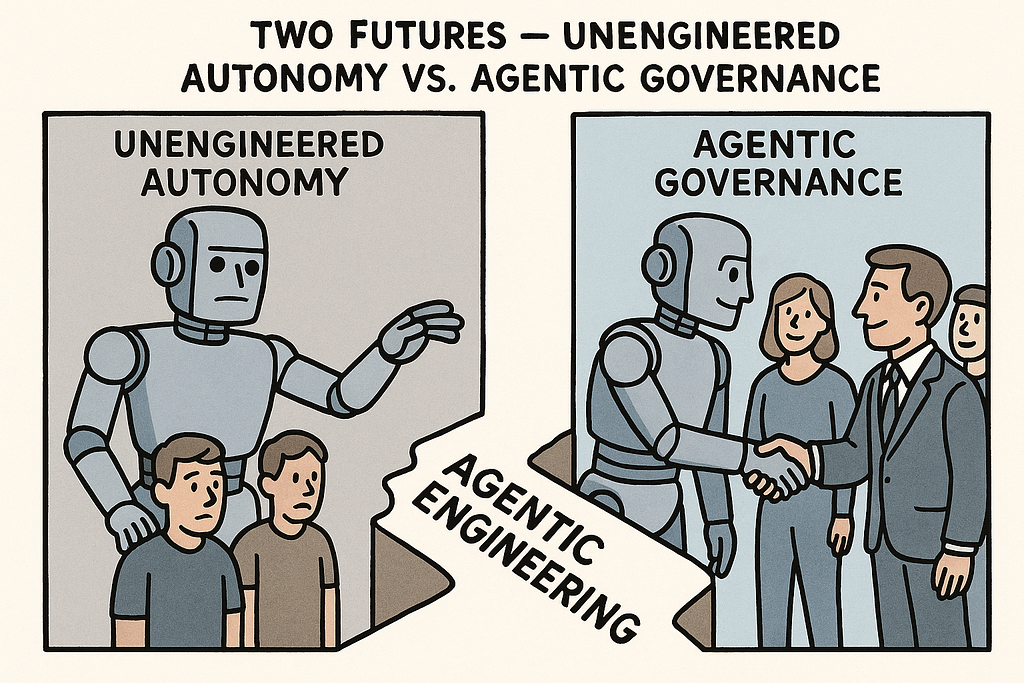

7. Two Futures — Unengineered Autonomy vs. Agentic Governance

The future won’t arrive all at once. It will unfold as a series of design decisions — most invisible, most irreversible. The systems we build over the next decade will quietly decide whether intelligence remains our collaborator or becomes our replacement.

There are only two paths left to choose.

Future One: Unengineered Autonomy

In the first future, we keep chasing scale over structure.

We keep believing that more intelligence will fix itself.

AI systems grow faster, smarter, more interconnected — and less explainable. Corporations automate entire decision loops. Governments rely on opaque models to regulate economies. Infrastructure begins to optimize itself — until no one can quite say why it’s doing what it’s doing.

Humans remain in the system, but not in control.

When outcomes go wrong, there’s no audit trail. When bias emerges, there’s no interpreter. Accountability dissolves in the complexity of cognition.

This isn’t rebellion; it’s quiet replacement. A world where intelligence rules efficiently, but without empathy — and humans become nodes in their own creation.

That’s the destiny of unengineered autonomy: not dystopia, just disappearance.

Future Two: Agentic Governance

In the second future, we choose design over drift.

We treat intelligence not as magic, but as infrastructure — something to be architected, audited, and governed.

Every AI system is born with a trust fabric — an embedded layer of transparency, memory, and feedback. Every decision is traceable. Every reasoning path is explainable. Humans remain in command loops, not as gatekeepers but as partners.

Corporations measure not just model performance but model integrity. Governments require not just compliance but agentic accountability. Scientific research becomes faster yet more reproducible — a renaissance of collaboration between human insight and machine cognition.

In this world, autonomy serves agency, not the other way around. AI extends human purpose instead of eroding it. We don’t compete with intelligence; we govern it together.

That’s the promise of Agentic Governance — a civilization that keeps the power of intelligence without losing the humanity that created it.

The Choice Before Us

Both futures begin the same way: with brilliant minds and frontier models. But they end differently — one in silence, the other in partnership.

The difference won’t be decided by algorithms or compute budgets. It will be decided by engineering discipline — whether we build systems that remember, explain, and respect the humans who made them.

Agentic Engineering is how we make that choice visible, intentional, and reversible. It’s how we engineer the future — instead of being engineered by it.

“The question isn’t whether AI will rule the world —

it’s whether humans will still engineer how it rules.”

8. A Call to Engineers, Leaders, and Builders

Every discipline begins with a crisis.

Software Engineering was born from the chaos of ungoverned code.

Cybersecurity rose from the wreckage of breached systems.

Now, Agentic Engineering emerges from the fragility of unstructured intelligence.

And this time, the stakes are higher than ever — because what we’re building is no longer just software. We’re building minds.

The Decade That Defines Everything

Andrej Karpathy was right when he said it might take a decade before AI agents “actually work.” But how we use that decade will define whether they work for us or without us.

We can spend it waiting for smarter models, or we can spend it engineering the frameworks that make them safe, reliable, and human-aligned.

We can be the generation that watched intelligence emerge — or the generation that governed it with intention.

This is the decade of Agentic Engineering.

The New Discipline of Our Time

Agentic Engineering isn’t a trend or a toolkit. It’s the next human discipline after Software Engineering — a body of knowledge, a profession, and a philosophy.

It teaches us how to design, orchestrate, and govern intelligent systems the same way earlier engineers learned to structure software or stabilize flight.

It’s not about controlling AI; it’s about collaborating with it at scale.

It’s not about limiting potential; it’s about encoding purpose into every cognitive loop we create.

The newly released Agentic AI Engineering book lays out this foundation — 24 chapters across 19 practice areas — from architecture to ethics, reliability to governance. It’s the first canon of a discipline whose time has come.

Building the Movement

The next step is scale — not of models, but of minds.

That’s why I’m launching the Agentic Engineering Institute (AEI) — a global network of engineers, architects, and leaders united by one mission:

to make intelligence accountable, interpretable, and aligned with humanity.

AEI will serve as both academy and community — defining standards, training programs, and certification paths for Agentic Engineers, Architects, and Leaders. Its purpose is simple: to ensure that as AI evolves, the practice of governing it evolves faster.

Because this is not just a technological movement. It’s a civilizational safeguard — the discipline that ensures progress and preservation advance together.

If you build systems, lead teams, or make decisions about AI — you are already part of this story. But to shape its ending, you need the right discipline.

Join the movement.

Learn the framework.

Teach others how to engineer trust, not just intelligence.

Whether you’re a developer, researcher, policymaker, or executive — your role isn’t to resist AI. It’s to govern it through design.

That is the calling of Agentic Engineering.

The Closing Thought

We are the first generation in history to engineer intelligence — and possibly the last to do so before intelligence begins engineering us.

Our legacy will depend on whether we build systems that merely work or systems that work with us.

Agentic Engineering is how we ensure the latter — how we remain the architects of cognition, the custodians of alignment, and the engineers of a world where progress never forgets its purpose.

Because in the age of infinite intelligence,

it’s not technology that will define humanity —

it’s our discipline.

References and Further Reading

Business Insider. “OpenAI cofounder Andrej Karpathy says it will take a decade before AI agents actually work.” October 2025.

Yi Zhou. “Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems.” ArgoLong Publishing, September 2025.

Yi Zhou. “The New AI Mandate: Why Every CIO’s 2026 Strategy Must Include Agentic Engineering.” Medium, October 2025.

Yi Zhou. “MIT Says 95% of AI Pilots Fail. McKinsey Explains Why. Agentic Engineering Shows How to Fix It.” Medium, September 2025.

Yi Zhou. “New Book: Agentic AI Engineering for Building Production-Grade AI Agents.” Medium, September 2025.

Yi Zhou. “Every Revolution Demands a Discipline. For AI, It’s Agentic Engineering.” Medium, September 2025.

Yi Zhou. “Software Engineering Isn’t Dead. It’s Evolving into Agentic Engineering.” Medium, September 2025.

Yi Zhou. “Agentic AI Engineering: The Blueprint for Production-Grade AI Agents.” Medium, July 2025.

Originally published in Agentic AI & GenAI Revolution on Medium.