- Dec 11, 2025

C3 Just Crushed DeepSeek-OCR and Redefined AI Compression for Enterprise AI

- Engineer Forum


In the fall of 2025, something unexpected happened in the AI world. Companies with huge AI budgets discovered that their most advanced models were failing at a very old problem. Not creativity. Not reasoning. Context.

Even models with the largest context windows on the market slowed down, drove up costs, and produced inconsistent results when fed long documents, multi-day conversations, or large knowledge bases. Enterprises that expected AI to replace entire workflows had to face a simple truth.

Long context is expensive. Long context is fragile. Long context breaks.

So research teams began to hunt for a breakthrough. Not another massive model. Not another 200K-token window. A new way to compress information so that AI agents could think more efficiently.

DeepSeek-OCR delivered the first shock. C3 delivered the second, far bigger one.

What happened next is now reshaping how enterprise AI systems are engineered.

The DeepSeek-OCR Breakthrough

DeepSeek-OCR proposed a novel method: convert pages of text into images, compress them into visual latent tokens, and feed these compact representations to an LLM. This dramatically reduced the number of tokens the language model had to process.
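Throughout this comparison, "compression ratio" means the number of original text tokens divided by the number of compressed tokens the decoder actually reads (vision tokens for DeepSeek-OCR, latent tokens for C3). The arithmetic is simple, but it helps to see it spelled out. The helper names below are hypothetical and are not taken from either project's code.

```python
import math


def compression_ratio(text_tokens: int, compressed_tokens: int) -> float:
    """Hypothetical helper: original text tokens per compressed token."""
    if compressed_tokens <= 0:
        raise ValueError("compressed_tokens must be positive")
    return text_tokens / compressed_tokens


def compressed_budget(text_tokens: int, target_ratio: float) -> int:
    """Hypothetical helper: fewest compressed tokens that keep the ratio at or below target_ratio."""
    return math.ceil(text_tokens / target_ratio)


# A hypothetical 1,000-token page read as 100 vision tokens is 10x compression.
print(compression_ratio(1000, 100))  # 10.0
# Pushing the same page to 20x leaves the decoder only 50 tokens to work with.
print(compressed_budget(1000, 20))   # 50
```

Halving the compressed budget doubles the ratio, and that is exactly the axis along which the precision figures for the two methods diverge.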

DeepSeek-OCR’s results were strong at moderate compression levels.

At roughly 10x compression, it achieved about 97% reconstruction precision.

At about 20x compression, precision fell to about 60%.

This proved that optical pathways can preserve meaningful information with far fewer tokens. It was an important leap in the search for scalable long-context AI.

However, optical compression inherits limitations from the visual domain. Rendering noise. Layout inconsistencies. Resolution constraints. These impose structural limits whose effects worsen as compression ratios increase.

The research community wanted to know whether a purely linguistic approach could outperform vision.

C3 Raises the Ceiling

C3 (Context Cascade Compression) answered with one of the most striking performance jumps of the year. It compresses text directly into latent tokens using a small encoder model, with no image rendering step, and a larger decoder model then reconstructs the text from those tokens.
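The shape of a cascade like this can be illustrated with a toy, pure-Python stand-in: a fixed set of K query vectors attends over N token embeddings and returns K latent vectors, so the compression ratio is N/K. This is an assumption-laden sketch with random, untrained weights. It is not C3's actual encoder (which the source describes as a small language model), it does not model the larger decoder at all, and the names `attention_pool` and `random_vectors` and the sizes chosen are hypothetical.

```python
import math
import random


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_pool(token_embs, queries):
    """Hypothetical stand-in for a learned compressor.

    token_embs: N vectors of size d (stand-in for encoder hidden states).
    queries:    K vectors of size d (stand-in for learned latent queries).
    Returns K latent vectors, each a softmax-weighted average of the tokens.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    latents = []
    for q in queries:
        weights = softmax([dot(q, t) * scale for t in token_embs])
        latents.append(
            [sum(w * t[j] for w, t in zip(weights, token_embs)) for j in range(d)]
        )
    return latents


def random_vectors(n, d, rng):
    # Random Gaussian vectors; real systems would use trained embeddings.
    return [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]


rng = random.Random(0)
N, K, D = 400, 20, 8  # 400 "text tokens" squeezed into 20 latent vectors
tokens = random_vectors(N, D, rng)
queries = random_vectors(K, D, rng)
latents = attention_pool(tokens, queries)
print(len(latents), len(latents[0]), N / K)  # 20 8 20.0
```

In a trained system the latents would be optimized so that the decoder can regenerate the original text; here they only show the data flow of N vectors in and K vectors out.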

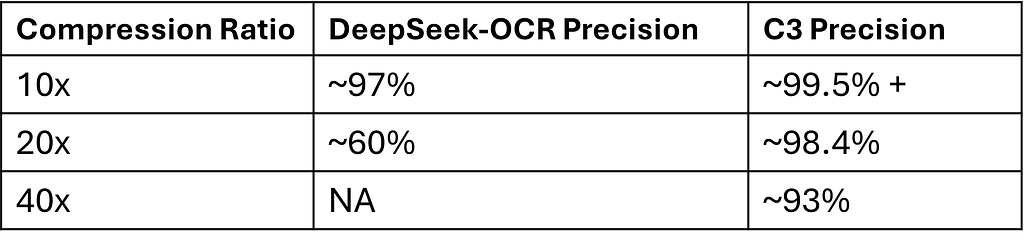

The numbers are decisive.

Table: Reconstruction Precision Comparison

C3 retains near-perfect fidelity even at 20x compression. It remains stable at nearly 40x compression with about 93% precision. DeepSeek-OCR, whose precision had already fallen to about 60% at 20x, does not operate effectively in that range.

This is not a marginal improvement. It redefines the upper bound of what long-context compression can do.

C3’s consistency matters because enterprises depend on predictability. Its sequential decay pattern means errors tend to appear near the end of the text rather than randomly throughout, which supports debugging, safety, and auditability.
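One way a team might check for this pattern in its own evaluations is to measure where reconstruction errors fall, not just how many there are. The sketch below is hypothetical: it uses a simplified position-wise precision (not necessarily the metric either paper reports) and an "error centroid", the mean relative position of the errors, where values near 1.0 indicate errors clustered at the end.

```python
def mismatch_positions(original, reconstructed):
    """Indices where the reconstruction disagrees with the original.

    Positions the decoder never produced (a truncated output) count as errors;
    extra trailing tokens in `reconstructed` are ignored in this simplification.
    """
    shared = min(len(original), len(reconstructed))
    errors = [i for i in range(shared) if original[i] != reconstructed[i]]
    errors.extend(range(shared, len(original)))
    return errors


def precision(original, reconstructed):
    """Hypothetical position-wise precision, not necessarily the papers' metric."""
    if not original:
        return 1.0
    return 1 - len(mismatch_positions(original, reconstructed)) / len(original)


def error_centroid(original, reconstructed):
    """Mean relative position of errors in (0, 1), or None if there are none.

    Near 1.0: errors cluster at the end of the text (sequential decay).
    Near 0.5: typical of errors scattered evenly through the text.
    """
    errors = mismatch_positions(original, reconstructed)
    if not errors:
        return None
    n = len(original)
    return sum((i + 0.5) / n for i in errors) / len(errors)


original = "the quick brown fox jumps over the lazy dog today".split()
tail_loss = original[:8] + ["cat", "tomorrow"]  # errors at positions 8 and 9
scattered = list(original)
scattered[2], scattered[6] = "red", "a"         # errors at positions 2 and 6

print(round(precision(original, tail_loss), 2), round(error_centroid(original, tail_loss), 2))  # 0.8 0.9
print(round(precision(original, scattered), 2), round(error_centroid(original, scattered), 2))  # 0.8 0.45
```

Both reconstructions score the same precision, yet the first localizes its damage to the tail, which is the property that makes failures easier to audit.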

Why Enterprise Teams Should Care

Enterprises adopt AI for ROI, not novelty. Compression directly affects three core business drivers.

1. Cost optimization

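Every token removed from a prompt reduces the compute the downstream model spends, although the compression encoder still has to read the full text once. C3 can reduce token counts by 10x to 40x while keeping reconstruction precision at roughly 93% or better. At scale, this lowers inference spend.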

2. Accuracy across long horizon tasks

Enterprise workflows such as multi-document analysis, multi-step reasoning, customer interaction histories, or retrieval-enriched tasks require stable context retention. High-fidelity compression improves end-to-end performance.

3. Reliable system behavior

Predictable reconstruction errors matter for debugging and compliance. C3’s structured decay is more aligned with enterprise reliability needs than the diffuse errors seen in optical compression at high ratios.
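The cost driver above can be made concrete with back-of-the-envelope arithmetic. The workload and the price per million tokens below are hypothetical, `monthly_input_cost` is an illustrative helper, and the sketch deliberately leaves out the compressor's own compute, so real savings would be smaller than these figures.

```python
def monthly_input_cost(requests_per_day, tokens_per_request,
                       usd_per_million_tokens, compression_ratio=1.0, days=30):
    """Hypothetical input-token spend of the downstream model over `days`.

    Assumes each compressed token is billed like one ordinary input token and
    ignores the cost of running the compression encoder itself.
    """
    tokens_seen = tokens_per_request / compression_ratio
    total_tokens = requests_per_day * days * tokens_seen
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical workload: 10,000 requests a day, 50,000 context tokens each,
# priced at an assumed $3 per million input tokens.
for ratio in (1, 10, 40):
    print(ratio, monthly_input_cost(10_000, 50_000, 3.0, compression_ratio=ratio))
# 1 45000.0
# 10 4500.0
# 40 1125.0
```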

Compression is not an isolated optimization. It is a foundation for scalable agentic systems.

Compression as a Core Practice Area of Agentic Engineering

Agentic Engineering is the emerging discipline focused on designing enterprise-grade AI systems that maximize ROI through accuracy, cost efficiency, and reliability. It treats AI deployments as engineered systems rather than isolated models.

Context compression is one of its essential practice areas because it impacts:

1. How much context agents can process.

2. How much it costs them to process it.

3. How accurately they retain information across extended workflows.
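The first of these, how much context an agent can process, reduces to a small calculation once a compression ratio is fixed. This is a minimal sketch under stated assumptions: each compressed token is assumed to occupy one slot in the model's context, and the window size, reserved budget, and function names are hypothetical.

```python
def required_ratio(doc_tokens, window_tokens, reserved_tokens=0):
    """Hypothetical helper: smallest compression ratio that fits doc_tokens.

    reserved_tokens is room kept free for instructions and the model's answer.
    Assumes one compressed token occupies one slot in the model's context.
    """
    available = window_tokens - reserved_tokens
    if available <= 0:
        raise ValueError("reserved_tokens leaves no room for context")
    return max(1.0, doc_tokens / available)


def effective_capacity(window_tokens, ratio, reserved_tokens=0):
    """Hypothetical helper: original-text tokens that fit when compressed at `ratio`."""
    return int((window_tokens - reserved_tokens) * ratio)


# Hypothetical: a 1M-token knowledge base, a 128K window, 28K tokens reserved.
print(required_ratio(1_000_000, 128_000, 28_000))  # 10.0
print(effective_capacity(128_000, 40, 28_000))     # 4000000
```

By the figures reported earlier, a 10x requirement sits where DeepSeek-OCR still reached about 97% precision, while a requirement near 40x is territory only C3 reached, at about 93%.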

Breakthroughs like DeepSeek-OCR and C3 directly shape the architecture, memory, and performance of enterprise AI agents. They are not theoretical curiosities. They are operational levers that determine competitive edge.

How AEI Helps Teams Apply These Breakthroughs

The Agentic Engineering Institute (AEI) was created to help enterprises and professionals turn breakthroughs like C3 into practical, high-ROI implementations. AEI provides up-to-date best practices, design patterns, and system architectures for applying advanced compression, adaptive context strategies, retrieval frameworks, governance, and agent orchestration.

AEI’s focus is practical advantage.

Faster systems. Lower costs. Higher accuracy. Clearer engineering discipline.

Exactly what enterprises need to deploy agents that matter.

AEI is currently in beta, with a public launch on January 5th, 2026. Professionals who want early access to the Agentic Engineering Body of Practices (AEBOP), training courses, and the community can register now for AEI’s beta.

The Compression Era Has Begun

DeepSeek-OCR opened a new path. C3 expanded it with a data-driven leap. Agentic Engineering provides the discipline to implement these advances. AEI gives practitioners everything they need to stay ahead.

The next generation of enterprise AI will not be defined by how many tokens a model can accept. It will be defined by how efficiently systems compress, store, retrieve, and reason with information.

C3 Just Crushed DeepSeek-OCR and Redefined AI Compression for Enterprise AI was originally published in Agentic AI & GenAI Revolution on Medium.