- Jan 2, 2026

Introducing the Agentic Engineering Institute (AEI): Top 10 Reasons You Should Join Now in 2026

- AEI Digest

In 2026, AI failures will no longer be explained away as “early days.”

For years, enterprises accepted missed targets, stalled pilots, and silent failures because AI was new. That grace period is over.

Today’s reality is stark:

• 90–95% of AI initiatives still fail to reach sustained production value

• Fewer than 12% deliver measurable ROI

• More than half of GenAI programs stall due to governance and integration failures

These numbers are not from startups experimenting in labs. They are from large enterprises deploying AI into real workflows, real customers, and real regulatory environments.

And by 2026, the question has changed.

It is no longer: “Can AI work?”

It is: “Why was this system allowed to operate this way?”

A Familiar Story, Now with Real Consequences

If you are a tech leader, architect, or senior engineer, you have likely lived this already.

An AI agent makes a decision that looks reasonable.

Nothing crashes. No alerts fire.

Weeks later, a customer escalates, an audit flags anomalies, or a business leader asks uncomfortable questions.

Suddenly, the room is quiet.

Because the failure wasn’t a bug.

It wasn’t a model outage.

It was an engineering gap.

And in 2026, those gaps no longer stay technical. They become operational, reputational, and personal.

The Analogy Enterprises Are Missing

This moment is not unprecedented.

In the early days of distributed systems, companies blamed outages on “immature infrastructure.”

Before security engineering matured, breaches were treated as bad luck.

Before SRE existed, downtime was “just part of scaling.”

Each time, the technology was not the real problem.

The discipline arrived late.

AI is now at that same inflection point.

Autonomous systems behave less like software and more like junior operators acting at machine speed. Yet most organizations still engineer them as if they were deterministic code.

That mismatch is why AI keeps failing quietly and expensively.

This Is Why Agentic Engineering and AEI Now Exist

Autonomous AI systems reason, plan, and act.

They cross system boundaries.

They evolve behavior without code changes.

They require a new engineering discipline.

That discipline is called Agentic Engineering.

And the institution formalizing it is the Agentic Engineering Institute (AEI).

AEI exists because 2026 is the year enterprises can no longer rely on optimism, documentation, or informal best practices to govern autonomous systems.

What follows are the Top 10 reasons professionals are joining AEI now — not out of curiosity, but because the cost of waiting has become measurable, repeatable, and personal.

1. 2026 Is the Year AI Reality Fully Overtakes Legacy Engineering

By 2026, AI is no longer experimental. It is embedded in customer journeys, revenue workflows, and compliance-adjacent systems.

And it does not behave like the software we were trained to engineer.

In production, modern AI systems:

• change behavior without code changes

• act across tools, APIs, and organizational boundaries

• fail through interactions, not isolated defects

• produce decisions that pass basic checks and still cause harm

This is already happening at scale.

Despite unprecedented investment, 90–95% of AI initiatives still fail to reach sustained production value. The gap is not intelligence. It is engineering discipline.

Legacy engineering assumes determinism: predictable behavior, localized failure, controlled change. Autonomous AI violates each of those assumptions.

By 2026, organizations are no longer judged on whether AI works in principle, but on whether its behavior was engineered, constrained, and defensible in practice.

That is the moment legacy engineering stops being sufficient — and a new discipline becomes unavoidable.

2. Most AI Failures Are Engineering Failures, Not Model Failures

Across enterprises, AI failures rarely look dramatic at first.

An agent produces a decision that seems reasonable.

Logs show no errors.

Dashboards stay green.

Then the consequences surface quietly: incorrect recommendations compound, compliance assumptions break, customer trust erodes, or downstream teams discover decisions they cannot explain or defend.

This is why the data looks so grim. Fewer than 12% of organizations report measurable AI ROI, not because models lack capability, but because reasoning, memory, context, and decision boundaries are rarely engineered as explicit, testable systems.

When intelligence is treated as an emergent side effect rather than an engineered capability, failures do not announce themselves. They accumulate.

By the time they are visible, they are no longer technical issues. They are operational, reputational, and leadership problems.

This is the defining shift of autonomous AI: failures are no longer loud, localized, or easy to roll back. They are subtle, systemic, and expensive.

3. Trust, Not Capability, Is What Blocks AI at Scale

At this point, lack of capability is no longer what holds AI back.

Leaders approve pilots.

Architects design scalable systems.

Engineers ship working solutions.

And then progress stalls.

Not because the AI cannot perform, but because no one can confidently stand behind its behavior.

Security teams hesitate because autonomy is opaque.

Compliance teams hesitate because decisions are hard to trace.

Operations hesitate because failures are difficult to detect and contain.

As a result, systems remain trapped between pilot and production.

Industry data reflects this clearly: more than half of GenAI initiatives stall due to governance and integration failures, not technical feasibility. The bottleneck is trust at scale.

Crucially, trust does not emerge from better explanations or more documentation. It emerges from engineering: observability, traceability, enforceable constraints, and evidence generated by the system itself.

Without engineered trust, autonomy remains impressive but unapprovable.

And in 2026, unapprovable systems do not scale.

4. Paper Governance Breaks the Moment Autonomy Acts

Most enterprises still govern AI the same way they govern traditional software: policies, review boards, risk registers, and approval documents.

That approach assumes systems behave predictably after release.

Autonomous AI does not.

Once systems reason, plan, and act on their own, governance that exists only on paper becomes performative. Policies do not enforce themselves. Risk registers do not detect drift. Approval slides do not generate evidence when something goes wrong.

This is why governance failures surface after deployment, not before it.

When an agent crosses a boundary, makes an unexpected decision, or combines tools in a novel way, static controls offer no protection. Teams are left reconstructing intent and behavior after the fact, often under audit or executive pressure.

Effective governance for autonomous systems must run alongside the system:

• constraints must be enforced in real time

• decisions must be traceable by design

• evidence must be generated automatically, not retroactively
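The three requirements above can be made concrete with a small sketch. It is illustrative only and does not come from AEBOP: the names Decision, max_refund, and GovernedExecutor, and the refund rule itself, are assumptions chosen for the example. The idea is that every proposed action passes through one wrapper that checks constraints before the action runs and writes an audit record whether or not the action is allowed.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Decision:
    """One action an agent proposes to take (hypothetical shape)."""
    agent: str
    action: str
    params: dict


# A constraint returns None to allow the decision, or a reason string to block it.
Constraint = Callable[[Decision], Optional[str]]


def max_refund(limit: float) -> Constraint:
    """Hypothetical business rule: block refunds above a fixed limit."""
    def check(decision: Decision) -> Optional[str]:
        amount = decision.params.get("amount", 0)
        if decision.action == "refund" and amount > limit:
            return f"refund {amount} exceeds limit {limit}"
        return None
    return check


@dataclass
class GovernedExecutor:
    constraints: list
    audit_log: list = field(default_factory=list)

    def execute(self, decision: Decision, handler: Callable[[Decision], object]):
        # 1. Enforce constraints before the action runs, not in a later review.
        violations = [r for r in (c(decision) for c in self.constraints) if r]
        allowed = not violations
        # 2. Record evidence for every decision, allowed or blocked.
        self.audit_log.append({
            "timestamp": time.time(),
            "agent": decision.agent,
            "action": decision.action,
            "params": decision.params,
            "allowed": allowed,
            "violations": violations,
        })
        # 3. Only allowed decisions reach the real side effect.
        return handler(decision) if allowed else None


executor = GovernedExecutor(constraints=[max_refund(100.0)])
issue_refund = lambda d: f"refunded {d.params['amount']}"

ok = executor.execute(Decision("support-bot", "refund", {"amount": 40.0}), issue_refund)
blocked = executor.execute(Decision("support-bot", "refund", {"amount": 900.0}), issue_refund)
```

Here the first call reaches the handler and the second is stopped, yet both leave an entry in audit_log. A real system would persist that log and cover far more than one rule; the sketch only shows where enforcement and evidence sit relative to the action.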

Without runtime governance, AI oversight is symbolic.

And in 2026, symbolic governance is no longer acceptable.

5. Agentic Engineering Is the Missing Discipline Autonomous Systems Require

At this point, the pattern should be clear.

AI failures are not caused by weak models.

They are not caused by lack of ambition.

They are caused by applying the wrong engineering discipline to systems that no longer behave like traditional software.

Software Engineering assumes deterministic execution.

MLOps focuses on model lifecycle, not system behavior.

Traditional governance remains static and external to runtime.

Autonomous systems break all three assumptions.

They reason instead of merely executing.

They adapt instead of remaining fixed.

They act across boundaries instead of staying contained.

Agentic Engineering exists to address this gap directly. It treats intelligence, trust, and governance as engineered system properties, not emergent side effects or afterthoughts.

Without this discipline, organizations are left improvising controls after incidents occur.

With it, autonomy becomes something that can be designed, evaluated, approved, and defended.

This is the inflection point where experimentation must give way to engineering.

6. This Is Exactly Why the Agentic Engineering Institute Exists

Once the discipline gap is visible, the next question becomes unavoidable:

Where does this discipline actually live?

Not in vendor documentation.

Not in ad-hoc internal playbooks.

Not in one-off courses or architecture decks.

Autonomous systems require a shared, external, professional standard that teams can design to, leaders can approve against, and organizations can defend under scrutiny.

That is why the Agentic Engineering Institute (AEI) was created.

AEI exists to do for autonomous AI what established institutions did for:

• Software Engineering when systems became mission-critical

• Security Engineering when breaches became systemic risk

• SRE when uptime became an executive concern

It formalizes Agentic Engineering as a profession, not a trend.

For many leaders, architects, and engineers, this is the first time the chaos surrounding autonomous AI resolves into something solid: a discipline, a standard, and a place to learn it properly.

From here forward, the question is no longer whether such an institution is needed.

It is whether you adopt the discipline early or inherit the consequences later.

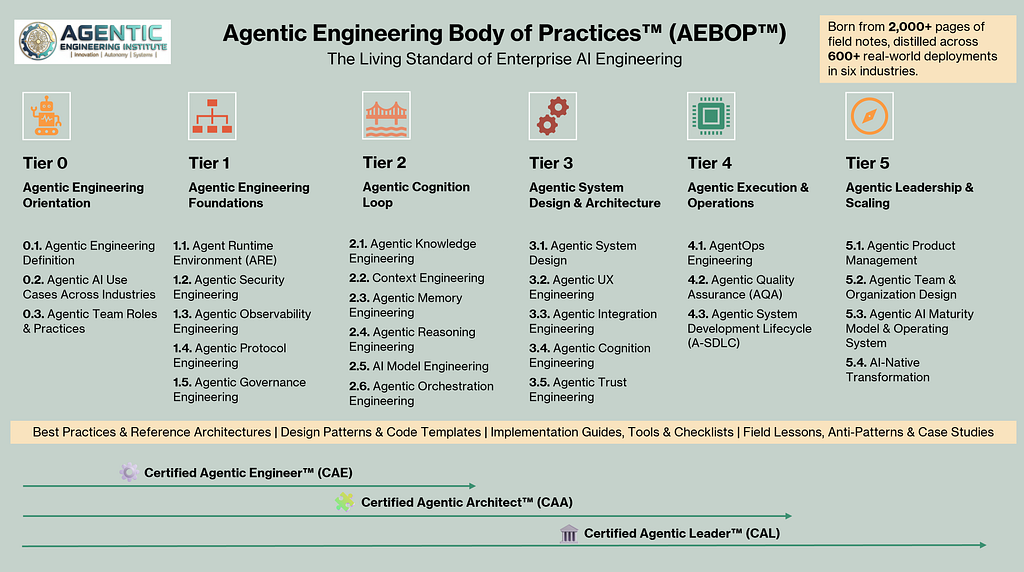

7. AEI’s AEBOP Is the Living Standard for Enterprise AI Engineering

Most AI guidance fails because it describes how intelligent systems should behave, not how they behave under real production pressure.

Real agentic systems drift.

They interact in unexpected ways.

They fail through combinations, not components.

The AEI’s Agentic Engineering Body of Practices (AEBOP™) exists for this reality.

AEBOP is a living, continuously updated field guide that defines how intelligent systems are built, operated, and governed in production. It is the professional standard for engineers, architects, and leaders designing enterprise-grade agentic systems.

It is not theoretical.

AEBOP is distilled from 2,000+ pages of field notes, across 600+ real-world deployments in six industries. Every pattern, safeguard, and anti-pattern reflects lessons learned under real deadlines and real risk.

Maintained by the Agentic Engineering Institute, AEBOP turns raw field experience into practical engineering assets:

• Proven best practices and reference architectures

• Canonical design patterns and anti-patterns

• Code examples, templates, and readiness checklists

• Maturity ladders and operational playbooks

Organized across 26 practice areas and six mastery tiers, and updated continuously, AEBOP keeps AEI members ahead of both failures and the curve.

Without a living standard, teams improvise and relearn hard lessons through incidents.

With AEBOP, autonomy becomes something you can design, scale, and defend.

8. AEI Establishes the Three Rigor Levels 2026 Demands

The failure pattern across enterprise AI is now unmistakable.

Most AI initiatives do not fail because teams lack models, talent, or ambition. They fail because engineering rigor is absent, assumed, or applied too late.

Industry data consistently shows that the majority of AI projects fail to reach sustained production value because systems are deployed without clear, enforceable standards for reliability, scale, or accountability. Rigor is often discovered only after incidents, audits, or blocked launches.

The issue is not that every system requires maximum rigor from day one.

The issue is that rigor is rarely made an explicit design decision.

Through AEBOP, the Agentic Engineering Institute establishes three rigor levels. Each autonomous system should be consciously assessed against them, and the appropriate level applied intentionally, based on business context and application exposure:

• Production-grade rigor for reliability, observability, and operability

• Enterprise-grade rigor for scale, integration, and lifecycle management

• Regulatory-grade rigor for auditability, traceability, and defensibility
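One way to make the rigor level an explicit design decision is to record it in code next to the system it applies to. The sketch below is a hypothetical illustration, not AEBOP's own definition. It makes two assumptions that the text above does not state: that the levels are cumulative (each level includes the focus areas of the levels below it), and that exposure can be reduced to two yes/no questions.

```python
from enum import IntEnum


class Rigor(IntEnum):
    PRODUCTION = 1
    ENTERPRISE = 2
    REGULATORY = 3


# Focus areas per level, taken from the list above.
FOCUS = {
    Rigor.PRODUCTION: ["reliability", "observability", "operability"],
    Rigor.ENTERPRISE: ["scale", "integration", "lifecycle management"],
    Rigor.REGULATORY: ["auditability", "traceability", "defensibility"],
}


def required_rigor(multi_system: bool, regulated: bool) -> Rigor:
    """Hypothetical selection rule based on application exposure."""
    if regulated:
        return Rigor.REGULATORY
    if multi_system:
        return Rigor.ENTERPRISE
    return Rigor.PRODUCTION


def focus_areas(level: Rigor) -> list:
    """Assumption: levels are cumulative, so include every lower level too."""
    areas = []
    for rigor in Rigor:  # iterates in definition order
        if rigor <= level:
            areas.extend(FOCUS[rigor])
    return areas


level = required_rigor(multi_system=True, regulated=False)
areas = focus_areas(level)
```

For an agent that spans several systems but sits outside regulated workflows, this selects the enterprise level and returns six focus areas. The point is only that the choice is written down and reviewable rather than assumed.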

Failures occur when rigor is assumed instead of engineered, applied inconsistently, or introduced only after autonomy has already expanded.

By 2026, successful organizations will not be those that apply blanket rigor everywhere, but those that design for the right rigor upfront and evolve it deliberately as systems mature.

Agentic Engineering turns rigor from a reactive fix into a first-class engineering decision.

9. AEI Compresses the Path From Pilot to Production by 2–3×

Across industries, organizations keep paying for the same agentic failures.

Unbounded autonomy.

Opaque decisions.

Brittle integrations.

Governance gaps discovered too late.

Each cycle costs months of redesign, stalled launches, audit friction, and lost credibility. The root cause is not lack of effort. It is the absence of shared, field-tested standards.

AEI helps organizations adopt AEBOP-driven standards that turn hard-won lessons into institutional knowledge, enabling teams to move from pilot to production 2–3× faster.

Instead of rediscovering failure modes through incidents and rework, teams:

• design against known patterns and anti-patterns

• apply readiness gates before scale

• make standards-based decisions instead of ad-hoc tradeoffs
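A readiness gate can be as simple as a checklist that must pass before a pilot is allowed to scale. The following is a minimal sketch under stated assumptions: the Check type, the blocking flag, and the specific check names are hypothetical and are loosely derived from the failure modes listed earlier in this section, not from an actual AEBOP checklist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    name: str
    passed: bool
    blocking: bool = True  # non-blocking checks are advisory only


def evaluate_gate(checks):
    """Return (ready, names of failed blocking checks)."""
    failed = [c.name for c in checks if c.blocking and not c.passed]
    return (not failed, failed)


checks = [
    Check("autonomy is bounded by explicit limits", passed=True),
    Check("decisions are traceable end to end", passed=False),
    Check("integration failure modes are tested", passed=True),
    Check("cost dashboard exists", passed=False, blocking=False),
]

ready, failed = evaluate_gate(checks)
```

In this example the gate stays closed because one blocking check fails, while the advisory check does not hold up the launch. Keeping the gate as data makes the approval decision repeatable across teams.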

The result is not just fewer failures, but faster, more confident approval and execution.

By 2026, advantage will not come from running more pilots. It will come from converting pilots into production systems reliably and repeatedly.

AEI is how organizations make that transition predictable and fast.

10. The Economics and Career Math Are Already Clear

At this point, the decision is no longer theoretical. The numbers are visible and repeatable.

The Economic Reality

A single moderate agentic incident typically triggers:

• $500K–$1.2M in remediation, delivery delays, and compliance effort

• Months of redesign, rework, and stalled roadmaps

• Executive time diverted from growth to damage control

These are not rare edge cases. They are the predictable cost of deploying autonomous systems without engineered intelligence, trust, and runtime governance.

More importantly, these costs compound over time.

Discipline engineered early is incremental. Discipline retrofitted later is disruptive and expensive.

The Career Reality

The same dynamic plays out at the individual level:

• Engineers inherit on-call risk for systems they cannot fully observe or control

• Architects absorb blame for systemic gaps that were never standardized

• Leaders carry personal exposure when failures escalate into audits, customer trust issues, or board scrutiny

By 2026, the market will distinguish clearly between professionals who can design, operate, and defend agentic systems and those who can only experiment with them.

The Consequence

The Agentic Engineering Institute exists for those who choose to act before this distinction hardens.

Joining AEI is not about following an AI trend. It is about:

• reducing avoidable cost and risk

• accelerating the path from pilot to production

• protecting professional credibility as autonomy becomes unavoidable

The economics are already decided.

The career math is already clear.

The only remaining question is when you act.

What Action Looks Like in 2026

By 2026, autonomous AI is no longer judged by novelty or promise.

It is judged by outcomes, reliability, and defensibility.

The practical question is no longer whether to act, but how deliberately to prepare — organizationally and individually — for a permanent shift in how work, authority, and responsibility are distributed.

For Organizations

For enterprises deploying or scaling agentic systems, 2026 is a competitive inflection point.

Autonomous AI is accelerating disruption from peers and non-traditional entrants alike. Digitally native firms are using autonomy to move faster, operate at lower cost, and reshape value chains. Responding with pilots and ad-hoc practices is no longer sufficient.

Leading organizations are therefore acting deliberately:

• Establishing formal partnerships with the Agentic Engineering Institute to adopt AEBOP as an enterprise-wide engineering standard

• Using AEBOP to align teams on how intelligence, trust, and governance are designed and evolved across systems

• Applying maturity gates and runtime standards to shorten the path from pilot to production and reduce redesign cycles

• Treating Agentic Engineering as strategic infrastructure rather than project-specific expertise

In 2026, Agentic Engineering is no longer just about managing AI risk.

It is about protecting value and competing effectively in an AI-native market.

For Individuals

For engineers, architects, and technical leaders, 2026 is also a career inflection point.

AI-driven autonomy is reshaping roles faster than job titles or org charts can keep up. As systems take on more decision-making, responsibility shifts upward to those who design, integrate, and govern them.

This creates a clear form of career disruption:

• Routine implementation skills commoditize quickly

• System-level judgment, design discipline, and accountability become differentiators

• Professionals closest to runtime behavior carry more risk and more influence

In response, many individuals are:

• Preparing for AI disruption by learning Agentic Engineering as a formal discipline, not a collection of tools

• Using AEBOP-aligned training to build production-grade competence that remains relevant as architectures change

• Pursuing certification to make that competence explicit and transferable

• Participating in practitioner communities focused on real-world failures, not hype cycles

This is less about chasing credentials and more about staying professionally viable as autonomy becomes the default operating model.

The 2026 Posture

2026 is when autonomous AI stops being something organizations experiment with and becomes something they are expected to engineer deliberately.

Organizations that prepare early establish standards instead of inheriting risk.

Professionals who prepare early shape systems instead of being disrupted by them.

That is not marketing language.

It is the practical posture emerging across serious enterprise AI programs.

Introducing the Agentic Engineering Institute (AEI): Top 10 Reasons You Should Join Now in 2026 was originally published in Agentic AI & GenAI Revolution on Medium.