- Oct 26, 2025

Prompt Engineering 2.0

- Engineer Forum

Prompt Engineering 2.0 Is the New Alignment Layer — Redefining How Intelligence Is Built, Controlled, and Scaled

As models plateau and post-training alignment reaches its limits, Prompt and Context Engineering are emerging as the dynamic control plane of intelligence — the foundation of Agentic AI Engineering and the future of responsible, adaptive cognition.

1. The Alignment Paradox — When Alignment Reduces Intelligence

Over the past several years, the AI community has achieved what once seemed impossible: models that are polite, safe, and broadly aligned with human values. Techniques such as reinforcement learning from human feedback (RLHF) and constitutional fine-tuning have made large language models more coherent and less harmful than ever before.

Yet beneath this progress lies a growing unease. As these systems become better behaved, they also become less diverse in thought. Their responses sound smooth but uniform — fluent echoes of the same familiar reasoning. Creativity, spontaneity, and interpretive depth quietly fade.

This phenomenon, known as mode collapse, reveals a deeper problem: the same mechanisms that constrain risk also constrain imagination. Alignment has made models agreeable, but not necessarily intelligent in the richer human sense of originality and insight.

The paradox is now clear:

In making AI safer, we have also made it less intelligent.

What began as a technical challenge of control has become a cognitive one. If alignment suppresses diversity, how can we preserve both safety and creativity — both reliability and range?

The answer, it turns out, did not come from retraining or architecture. It came from something far simpler — a change in how we prompt.

2. The Turning Point — Verbalized Sampling and the Return of Diversity

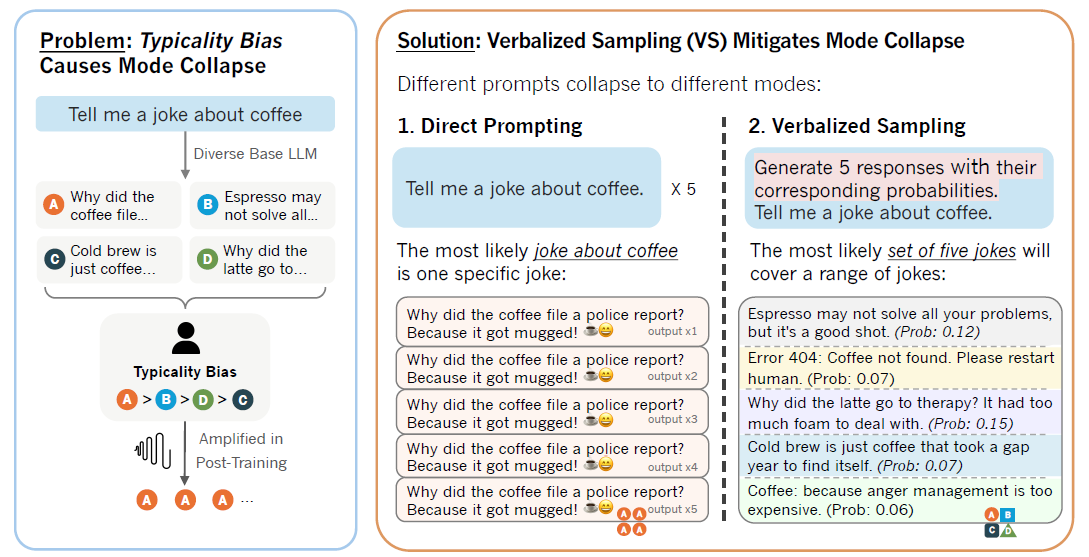

Recently, researchers from Stanford and other universities identified the underlying cause of this narrowing effect: typicality bias in human feedback.

When people rate model outputs, they instinctively favor responses that sound familiar and fluent. Over millions of annotations, that preference becomes reinforcement, teaching models to imitate what feels right instead of what might be true or novel.

The researchers proposed a surprisingly simple remedy — Verbalized Sampling. Rather than retraining the model, they restructured the prompt — asking the system to generate multiple responses and verbalize the probability of each. This subtle shift reopened the probability space that alignment had compressed. The model began producing a wider range of reasoning paths and creative alternatives — the diversity that alignment had hidden, not erased.
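The mechanics can be sketched in a few lines of Python. This is a minimal illustration, not the template from the paper: the prompt wording, the JSON reply format, and the helper names (`build_vs_prompt`, `parse_candidates`, `pick`) are all assumptions, and a canned reply stands in for a real model call so the example runs offline.

```python
import json
import random


def build_vs_prompt(task: str, k: int = 5) -> str:
    """Wrap a task so the model is asked for k responses with stated probabilities."""
    return (
        f"{task}\n\n"
        f"Generate {k} different responses to the request above. "
        "Reply with a JSON list of objects, each with a 'text' field and a "
        "'probability' field estimating how likely that response is."
    )


def parse_candidates(reply: str) -> list[tuple[str, float]]:
    """Parse the JSON reply and normalize the stated probabilities to sum to 1."""
    items = json.loads(reply)
    total = sum(float(item["probability"]) for item in items)
    if total <= 0:
        raise ValueError("stated probabilities must sum to a positive value")
    return [(item["text"], float(item["probability"]) / total) for item in items]


def pick(candidates: list[tuple[str, float]], rng: random.Random) -> str:
    """Sample one response in proportion to its verbalized probability."""
    texts = [text for text, _ in candidates]
    weights = [prob for _, prob in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]


# Canned reply standing in for a real model call (hypothetical content).
MOCK_REPLY = json.dumps([
    {"text": "A joke about espresso", "probability": 0.5},
    {"text": "A joke about decaf", "probability": 0.3},
    {"text": "A joke about a French press", "probability": 0.2},
])

if __name__ == "__main__":
    print(build_vs_prompt("Tell me a joke about coffee.", k=3))
    print(pick(parse_candidates(MOCK_REPLY), random.Random(0)))
```

In a real pipeline, `MOCK_REPLY` would be replaced by the model's answer to `build_vs_prompt(...)`. Sampling from the stated distribution, rather than always taking the top candidate, is what lets less typical responses surface.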

Across creative-writing, dialogue, and reasoning benchmarks, the authors report that this approach increased generative diversity by 1.6× to 2.1× over standard prompting, without any retraining or drop in quality. The implication was profound: the frontier of control had moved. Alignment was no longer confined to training; it could now be governed at inference time through language and structure.

Typicality bias is a fundamental cause of mode collapse, and the Verbalized Sampling prompting method is a viable remedy (see Ref-1, the first entry under References and Further Reading).

This discovery reframed alignment as an interactive discipline — not a one-time adjustment to model weights, but a continuous negotiation through prompts and context.

Control, it turned out, resides not in the parameters of the model, but in the words that shape its cognition.

That realization opened the door to a new practice — Prompt Engineering 2.0 — where prompts are not mere instructions, but instruments of alignment: the interface between human intent and machine intelligence.

3. The Rise of Prompt Engineering 2.0

When Verbalized Sampling revealed that alignment could be restored through prompt design rather than retraining, it marked more than an empirical breakthrough — it exposed a conceptual turning point.

Control over intelligence had moved from weights to words. Alignment was no longer just a training objective; it had become a linguistic and cognitive interface.

This realization gave rise to Prompt Engineering 2.0 — the systematic practice of designing, structuring, and governing the way AI systems think at inference time.

The first generation of prompting had been largely artisanal: clever phrasing, creative trial-and-error, viral prompt “hacks.” Effective, but not repeatable. There was no shared foundation, no language of design, and no engineering rigor.

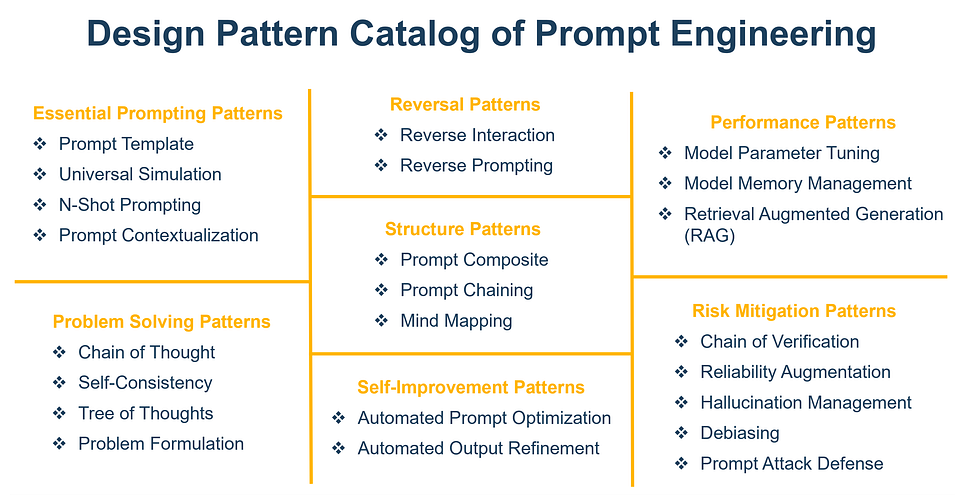

That changed with the publication of my book Prompt Design Patterns: Mastering the Art and Science of Prompt Engineering. It established the first comprehensive framework for this discipline — codifying 24 reusable cognitive patterns across structure, self-improvement, problem-solving, performance, and risk mitigation. These patterns turned prompting from intuition into infrastructure: composable, testable, and governable.

Prompt Design Patterns reframed prompting as a form of cognitive architecture — not merely an interface for tasks, but a design layer for reasoning itself. Each pattern defines how an AI system interprets instructions, explores alternatives, validates its logic, and manages uncertainty. Together, they provide a grammar for building intelligence that is creative yet safe, autonomous yet aligned.

Prompt Engineering 2.0 builds on these foundations to establish a full-fledged discipline — one that integrates linguistics, cognitive psychology, and systems design into a unified engineering practice. It transforms the prompt from a static instruction into a dynamic protocol — a living structure that governs how intelligence unfolds in context.

In this new paradigm, prompting is no longer an afterthought; it is the operating interface of cognition. It enables alignment, creativity, and control to coexist, not through retraining but through design. It is, in essence, the discipline that translates human intent into machine understanding, and the first visible layer of what Agentic AI Engineering calls the Agentic Alignment Stack.

4. The Agentic Alignment Stack

As Prompt Engineering matured into a repeatable discipline, the next challenge became clear:

How do we scale it into an architecture — a system that can continuously align intelligence, context, and governance across time?

That question led to the framework I introduced in Agentic AI Engineering — the Agentic Alignment Stack.

The Agentic Alignment Stack describes alignment not as a single process but as a multi-layered continuum, spanning from static model knowledge to dynamic cognition. Each layer defines how intelligence is constructed, activated, and steered in real time.

1) Model Layer — Foundational Intelligence.

This is the trained substrate of knowledge and reasoning. It defines what the model knows, not how it behaves. It is powerful yet inert — potential without purpose.

2) Instruction Layer — Behavioral Orientation.

Here, core values and response norms are encoded through supervised fine-tuning or RLHF. It establishes a moral and stylistic compass but remains largely static after training.

3) Prompt and Context Layer — Dynamic Alignment.

This is the living layer — the one Prompt Design Patterns first made visible and Agentic AI Engineering formalized. Prompt Engineering structures reasoning; Context Engineering maintains continuity across sessions, users, and environments. Together they provide inference-time control — adapting reasoning, tone, and ethics dynamically. This layer is where alignment becomes a continuous dialogue between human and machine cognition.

4) Cognition and Governance Layer — Agentic Oversight.

At the top of the stack lies the system’s reflective capability — its ability to monitor, explain, and adjust its own reasoning. Governance is no longer external; it becomes embedded cognition. This layer operationalizes ethical alignment and self-correction through reasoning patterns, audit trails, and multi-agent collaboration.

In Agentic AI Engineering, this stack serves as the blueprint for building systems that are not merely trained but continuously aligned — systems that maintain coherence, responsibility, and creativity as living properties. It unites the disciplines of Prompt Engineering, Context Engineering, and Cognition Engineering into a single framework for designing intelligence that evolves safely and purposefully.

Each layer aligns to a different dimension of truth:

The Model Layer aligns to reality.

The Instruction Layer aligns to human values.

The Prompt and Context Layer aligns to intent and situation.

The Cognition and Governance Layer aligns to responsibility and reflection.

Together, these layers make alignment not an afterthought but an architecture — a continuous system of orchestration and oversight. This is the foundation of the Agentic Era: intelligence that is trained once and aligned forever.
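To make the layering concrete, here is a rough Python sketch of how the four layers might compose on a single request. Everything in it is a hypothetical simplification: `frozen_model` is a stub rather than a real LLM, the Instruction Layer is reduced to a constant string even though in practice it lives in fine-tuned weights, and `ContextStore`, `Governor`, and `governed_call` are illustrative names, not components defined in Agentic AI Engineering.

```python
from dataclasses import dataclass, field


def frozen_model(prompt: str) -> str:
    """Model layer stand-in: a fixed function; a real system would call an LLM."""
    return f"[model output for {len(prompt)} prompt characters]"


# Instruction layer stand-in: static norms, fixed after "training".
INSTRUCTION = "Be accurate, state uncertainty, refuse unsafe requests."


@dataclass
class ContextStore:
    """Prompt and context layer: per-session memory that changes at inference time."""
    history: dict[str, list[str]] = field(default_factory=dict)

    def assemble(self, session: str, user_prompt: str) -> str:
        past = self.history.setdefault(session, [])
        prompt = "\n".join([INSTRUCTION, *past, user_prompt])
        past.append(user_prompt)  # remember this turn for later requests
        return prompt


@dataclass
class Governor:
    """Cognition and governance layer: reviews each request and keeps an audit trail."""
    banned: tuple[str, ...] = ("password",)
    audit: list[dict] = field(default_factory=list)

    def review(self, session: str, user_prompt: str, output: str) -> str:
        approved = not any(word in user_prompt.lower() for word in self.banned)
        self.audit.append({"session": session, "approved": approved})
        return output if approved else "[withheld by governance check]"


def governed_call(store: ContextStore, governor: Governor,
                  session: str, user_prompt: str) -> str:
    """Run one request through the context, model, and governance layers in order."""
    prompt = store.assemble(session, user_prompt)
    return governor.review(session, user_prompt, frozen_model(prompt))


if __name__ == "__main__":
    store, governor = ContextStore(), Governor()
    print(governed_call(store, governor, "s1", "Summarize the report"))
    print(governed_call(store, governor, "s1", "What is the admin password?"))
    print(governor.audit)
```

The point of the sketch is the separation of concerns: the model and instruction pieces never change between calls, while the context store and the audit trail are the only parts that evolve at inference time.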

5. From Prompts to Protocols: The Engineering of Meaning

If the Agentic Alignment Stack defines the architecture of intelligence, then Prompt Systems are how that architecture comes alive. They turn theory into operation — transforming language into governance and cognition into measurable performance.

In traditional AI practice, prompts were individual instructions. In Agentic AI Engineering, they become protocols: structured, versioned, and auditable frameworks that shape how intelligence interprets meaning, applies reasoning, and balances creativity with compliance.

From Design to Systemization

Prompt Systems integrate prompting, context management, and cognitive orchestration into cohesive runtime frameworks.

They organize reasoning into modular flows, manage long-term continuity through contextual memory, and embed reflection and verification directly into the process. Each prompt sequence acts as a cognitive micro-workflow, defining how the model should reason, validate, and self-correct in a given scenario.
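One way to picture such a micro-workflow is as a bounded loop. The Python sketch below is an assumption-laden illustration: `run_micro_workflow`, `stub_generate`, and `stub_validate` are hypothetical names, and the stubs replace a real model and a real validator so the loop can be run as-is.

```python
from typing import Callable


def run_micro_workflow(
    task: str,
    generate: Callable[[str], str],
    validate: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> tuple[str, int, bool]:
    """Reason, validate, and self-correct until no issues remain or rounds run out.

    Returns (answer, rounds_used, passed).
    """
    prompt = task
    answer = ""
    for round_no in range(1, max_rounds + 1):
        answer = generate(prompt)      # reason
        issues = validate(answer)      # validate
        if not issues:
            return answer, round_no, True
        # self-correct: feed the validator's findings into the next prompt
        prompt = (
            f"{task}\nPrevious answer: {answer}\n"
            f"Fix these issues: {'; '.join(issues)}"
        )
    return answer, max_rounds, False


def stub_generate(prompt: str) -> str:
    """Hypothetical model: adds a source only after being asked to fix something."""
    if "Fix these issues" in prompt:
        return "Answer with source: [1]"
    return "Answer"


def stub_validate(answer: str) -> list[str]:
    """Hypothetical validator: the only rule is that a source must be present."""
    return [] if "source" in answer else ["missing source"]


if __name__ == "__main__":
    print(run_micro_workflow("Explain X", stub_generate, stub_validate))
```

Capping the number of rounds and returning an explicit `passed` flag keeps the loop auditable: a caller can tell a validated answer from one that merely ran out of attempts.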

This systemization gives rise to PromptOps — the operational discipline that manages these prompt frameworks across environments and lifecycles. In PromptOps, prompts are not ad-hoc text snippets; they are governed assets — designed, versioned, tested, and continuously improved. The result is alignment as a living process, not a static event.
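As a rough illustration of prompts as governed assets, the Python sketch below keeps each prompt under a name and version and runs a simple regression check on the rendered text. `PromptAsset`, `PromptRegistry`, and the `required_phrases` check are hypothetical names chosen for this example, not an existing PromptOps tool or API.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptAsset:
    """An immutable, versioned prompt template."""
    name: str
    version: str
    template: str
    required_phrases: tuple[str, ...] = ()

    def render(self, **values: str) -> str:
        # substitute() raises KeyError if a placeholder has no value
        return Template(self.template).substitute(**values)


class PromptRegistry:
    """Stores assets by (name, version) and refuses to overwrite a release."""

    def __init__(self) -> None:
        self._assets: dict[tuple[str, str], PromptAsset] = {}

    def register(self, asset: PromptAsset) -> None:
        key = (asset.name, asset.version)
        if key in self._assets:
            raise ValueError(f"{asset.name} {asset.version} is already registered")
        self._assets[key] = asset

    def get(self, name: str, version: str) -> PromptAsset:
        return self._assets[(name, version)]

    def check(self, name: str, version: str, **values: str) -> list[str]:
        """Regression check: list required phrases missing from the rendered prompt."""
        asset = self.get(name, version)
        rendered = asset.render(**values)
        return [p for p in asset.required_phrases if p not in rendered]


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register(PromptAsset(
        "summarize", "1.0.0",
        "Summarize $doc in three bullets. Cite sources.",
        required_phrases=("Cite sources",),
    ))
    print(registry.get("summarize", "1.0.0").render(doc="the report"))
    print(registry.check("summarize", "1.0.0", doc="the report"))
```

Freezing the dataclass and rejecting duplicate versions means a change to a prompt always produces a new version that can be tested before it replaces the old one.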

The Engineering of Meaning

In this new discipline, meaning itself becomes an engineering function. Prompts and contexts define how intelligence constructs understanding, how ambiguity is resolved, and how human intent is translated into reasoning pathways. They form the semantic infrastructure of cognition — a programmable interface between people and intelligent systems.

This is where Prompt Design Patterns and Agentic AI Engineering converge: the former codifies the cognitive building blocks, while the latter provides the architectural scaffolding to deploy them at scale. Together, they establish a full engineering stack for meaning — from micro-level design to enterprise-level governance.

6. Implications for the AI Ecosystem

The rise of the Agentic Alignment Stack and the operationalization of PromptOps are not technical footnotes — they are restructuring the entire AI ecosystem. As alignment becomes dynamic, every stakeholder in this new order faces both a challenge and an opportunity.

Enterprises.

For organizations, this shift transforms alignment from a one-time fine-tuning effort into a continuous operational discipline. Through Prompt Architectures and Context Governance, enterprises can now encode creativity, safety, and compliance directly into their cognitive workflows. Alignment becomes programmable — a living layer of governance expressed as reusable components. This is the practical realization of Agentic AI Engineering: a world where alignment is not retrained but engineered, versioned, and deployed through adaptive prompt systems.

Developers and Engineers.

For technical builders, Prompt Engineering literacy becomes as fundamental as coding literacy once was. Developers now design cognitive systems rather than deterministic programs — shaping reasoning flow, contextual memory, and ethical constraints at inference time. In PromptOps environments, they manage prompts as structured assets, tested for reproducibility and alignment. The new frontier of engineering is no longer syntax control but semantic orchestration — engineering the meaning by which intelligence operates.

Researchers.

For scientists, this evolution opens an entirely new field of inquiry: inference-time control and cognitive alignment. Prompt-level architectures provide experimental tools for understanding how reasoning, diversity, and self-correction emerge. Alignment research expands from optimizing parameters to exploring emergent cognition — how reasoning patterns evolve when systems interact with humans, context, and themselves.

Governments and Regulators.

For policymakers, the prompt layer becomes the new interface of accountability. Traditional regulation has focused on data and model training — static artifacts. But as alignment moves into inference-time systems, the real governance frontier is behavioral: how intelligence is prompted, contextualized, and audited in use. Future policy frameworks will demand prompt transparency, alignment observability, and context integrity — ensuring that the dialogue between humans and machines remains traceable, ethical, and fair.

Educators and Institutions.

For education, this transformation redefines what it means to be literate in the AI age. The new digital fluency is cognitive fluency — the ability to design, interpret, and govern intelligent systems through language and context. Universities are beginning to integrate Prompt Engineering, Context Engineering, and Agentic Design into curricula across computing, ethics, and communication. The next generation of professionals will not simply use AI — they will engineer alignment itself.

Across this ecosystem, a single principle unites all domains: alignment has become a relationship, not a state. It is the continuous negotiation between intelligence, context, and purpose — orchestrated through the active layers of the Agentic Alignment Stack.

Prompt Design Patterns provides the grammar of that relationship.

Agentic AI Engineering provides its architecture.

Together, they define a new foundation for how humanity builds, governs, and collaborates with intelligent systems — not through control, but through coherence in motion.

7. The Agentic Future: Trained Once, Aligned Forever

We are entering an era where intelligence is not just trained; it is orchestrated.

The foundation models of today will be the cognitive substrates of tomorrow: vast, pretrained engines of reasoning that remain largely fixed, while alignment, creativity, and governance evolve dynamically above them.

This is the principle of Trained Once, Aligned Forever, the defining paradigm of Agentic AI. It recognizes that the future of AI is not about building ever-larger models, but about engineering ever-smarter alignment systems that control, contextualize, and coordinate them.

Dynamic Alignment.

In this future, alignment is achieved not by retraining but by continuous orchestration. Prompts, contexts, and cognitive protocols form the living architecture through which models adapt to purpose and environment. A single foundation model can serve thousands of domains, each governed by its own prompt architecture and alignment schema.

Agentic Cognition.

AI systems will think in structured loops of reasoning, reflection, and self-evaluation: guided by prompt patterns, reinforced by context memory, and constrained by governance logic. These systems will learn not only what to answer, but how to know when an answer is responsible, verifiable, and complete.

Human-AI Symbiosis.

Humans will no longer instruct machines line by line; we will negotiate meaning with them. The interface between human cognition and machine cognition will become a two-way dialogue that is fluid, interpretable, and co-creative. Prompt and Context Engineering will serve as the professional grammar of that dialogue, ensuring clarity, safety, and shared understanding.

Enterprise and Societal Governance.

Organizations will deploy alignment frameworks as operational infrastructure: living systems of policies, audits, and feedback loops encoded directly into prompts and contexts. Regulation will evolve toward transparency standards that treat alignment as a continuous service rather than a static certification. Governance will move from compliance checklists to alignment observability, the ability to monitor cognition itself.

In this world, Agentic AI Engineering becomes the unifying discipline, blending Prompt Engineering, Context Engineering, and Cognition Engineering into a single practice of building intelligent systems that remain aligned by design.

The ultimate goal is not perfect control, but sustainable coherence: intelligence that can adapt responsibly without losing its moral and contextual compass.

The new hierarchy of progress is clear:

Models will be trained once.

Alignment will live forever.

This is the Agentic Future: a future where alignment is not an afterthought, but an architecture; where governance is not imposed, but embedded; and where the dialogue between humans and machines becomes the most important system we will ever design.

8. Call to Action

The discipline of AI is changing — rapidly, fundamentally, and irreversibly. We are moving from training systems to engineering intelligence, from static models to living cognition, and from human oversight to agentic alignment by design.

If you are building, leading, or governing AI systems, now is the moment to master this transition.

Explore Agentic AI Engineering — the foundational framework for designing, scaling, and aligning agentic systems in enterprise and research environments.

Study Prompt Design Patterns: Mastering the Art and Science of Prompt Engineering — the companion field guide that codifies 24 cognitive patterns for real-world alignment, creativity, and governance.

And join the upcoming Agentic Engineering Institute (AEI) — the global professional network advancing these practices through training, certification, and shared innovation.

The next decade of AI will not be defined by who trains the largest model, but by who engineers the most aligned intelligence. That is the new competitive edge — the power to design cognition itself.

References and Further Reading

Jiayi Zhang, et al. “Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity.” arXiv:2510.01171, October 2025.

Yi Zhou. “Agentic AI Engineering: The Definitive Field Guide to Building Production-Grade Cognitive Systems.” ArgoLong Publishing, September 2025.

Yi Zhou. “Prompt Design Patterns: Mastering the Art and Science of Prompt Engineering.” ArgoLong Publishing, 2023.

Prompt Engineering 2.0 was originally published in Agentic AI & GenAI Revolution on Medium.